Recursive Language Models (RLMs): From MIT’s Blueprint to Prime Intellect’s RLMEnv for Long Horizon LLM Agents

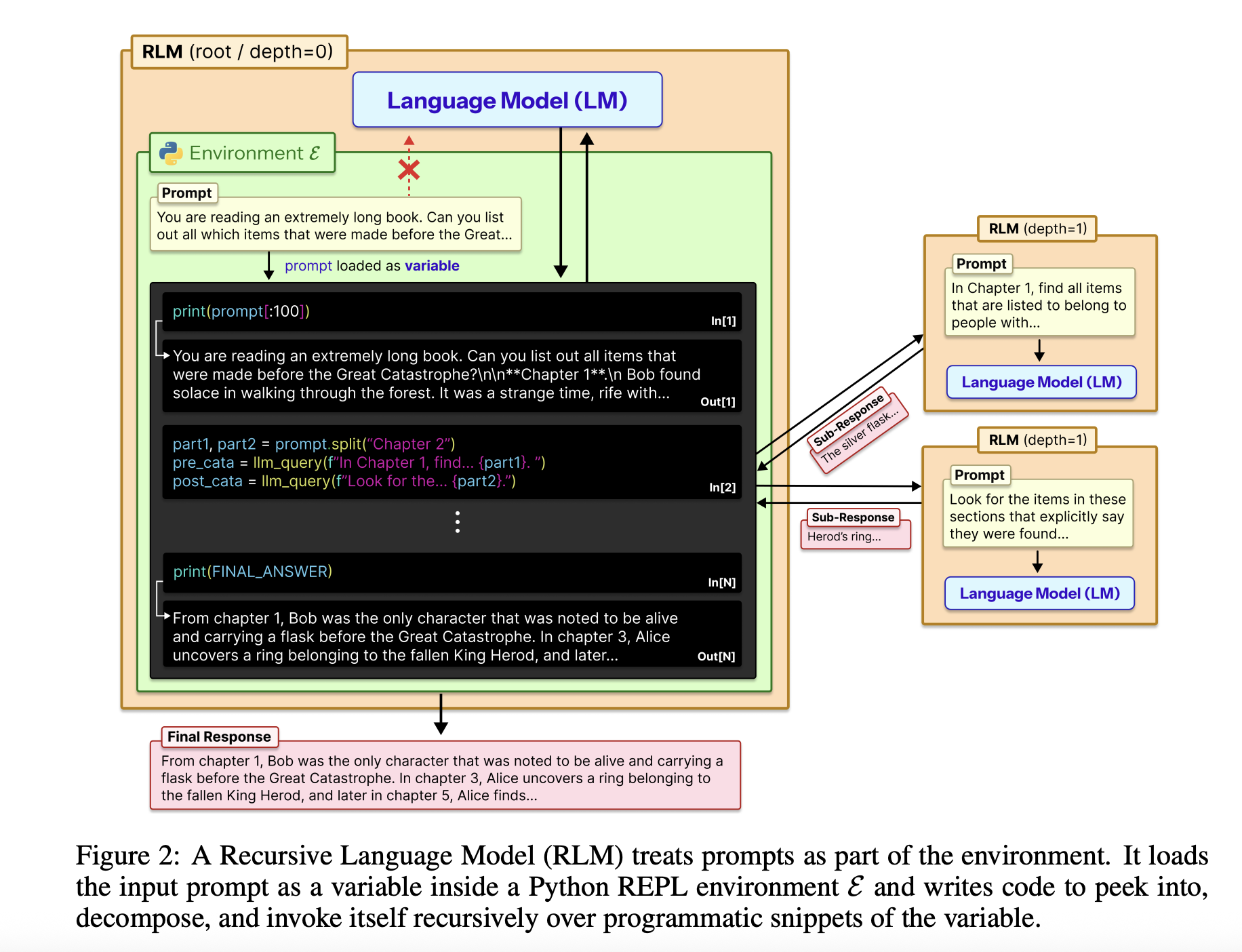

Recursive Language Models aim to break the usual trade-off between context length, accuracy and cost in large language models. Instead of forcing a model to read a giant prompt in one pass, RLMs treat the prompt as an external environment and let the model decide how to inspect it with code, then recursively call itself on smaller pieces.

The basics

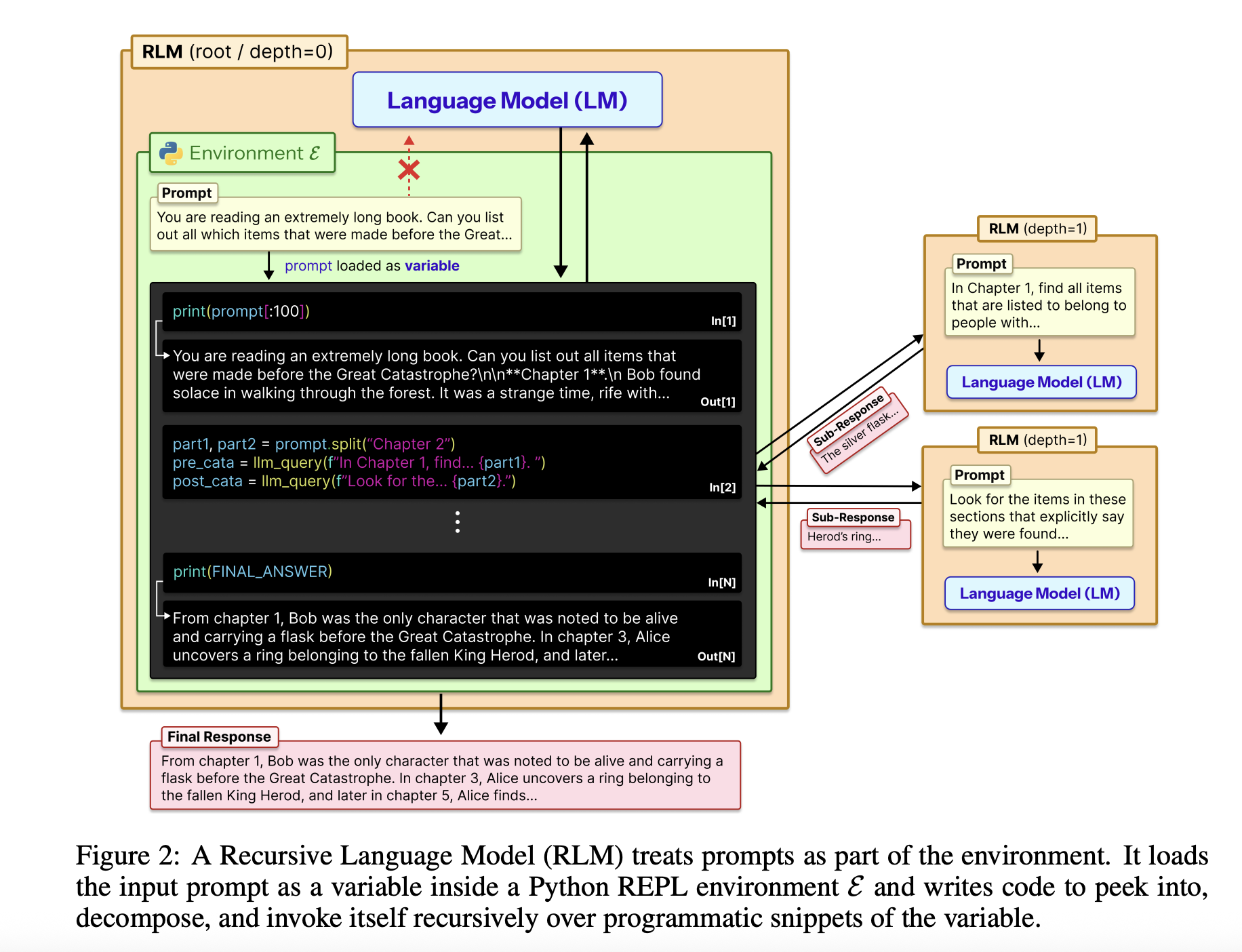

The full input is loaded into a Python REPL as a single string variable. The root model, for example GPT-5, never sees that string directly in its context. Instead, it receives a system prompt that explains how to read slices of the variable, write helper functions, issue sub-LLM calls, and combine the results. The model returns a final text answer, so the external interface stays the same as a standard chat completion endpoint.

The RLM design uses the REPL as the control plane for the long context. The environment, typically written in Python, exposes tools such as string slicing, regex search and helper functions such as llm_query that call a smaller model instance, for example GPT-5-mini. The root model writes code that calls these helpers to scan, partition and summarize the external context variable. The code can store intermediate results in variables and build the final answer step by step. This structure makes the input size independent of the model context window and turns long context handling into a program synthesis problem.
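The control plane described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: `llm_query` is a stub that stands in for a real sub-model call, and `run_in_repl`, `model_written_code` and the sample log input are hypothetical names invented for the example.

```python
import re

def llm_query(prompt: str) -> str:
    """Stub for a sub-model call (assumption: a real system would call an LLM API here)."""
    return f"[summary of {len(prompt)} chars]"

def run_in_repl(code: str, context: str) -> dict:
    """Execute model-written code in a namespace that holds the long input as a variable."""
    namespace = {"context": context, "llm_query": llm_query, "re": re}
    exec(code, namespace)  # a real deployment would sandbox this call
    return namespace

# Code the root model might emit. It touches `context` only through slices and regex,
# so the full string never has to enter the root model's own context window.
model_written_code = '''
head = context[:200]
hits = [m.start() for m in re.finditer(r"ERROR", context)]
snippets = [context[max(0, i - 50): i + 50] for i in hits]
notes = [llm_query("Explain this log excerpt: " + s) for s in snippets]
final_answer = f"{len(hits)} error(s) found; " + " | ".join(notes)
'''

long_input = ("ok line\n" * 10_000) + "ERROR disk full\n" + ("ok line\n" * 10_000)
state = run_in_repl(model_written_code, long_input)
print(state["final_answer"])
```

Only the short `final_answer` string would be returned to the caller, which is why the external interface can look like an ordinary chat endpoint.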

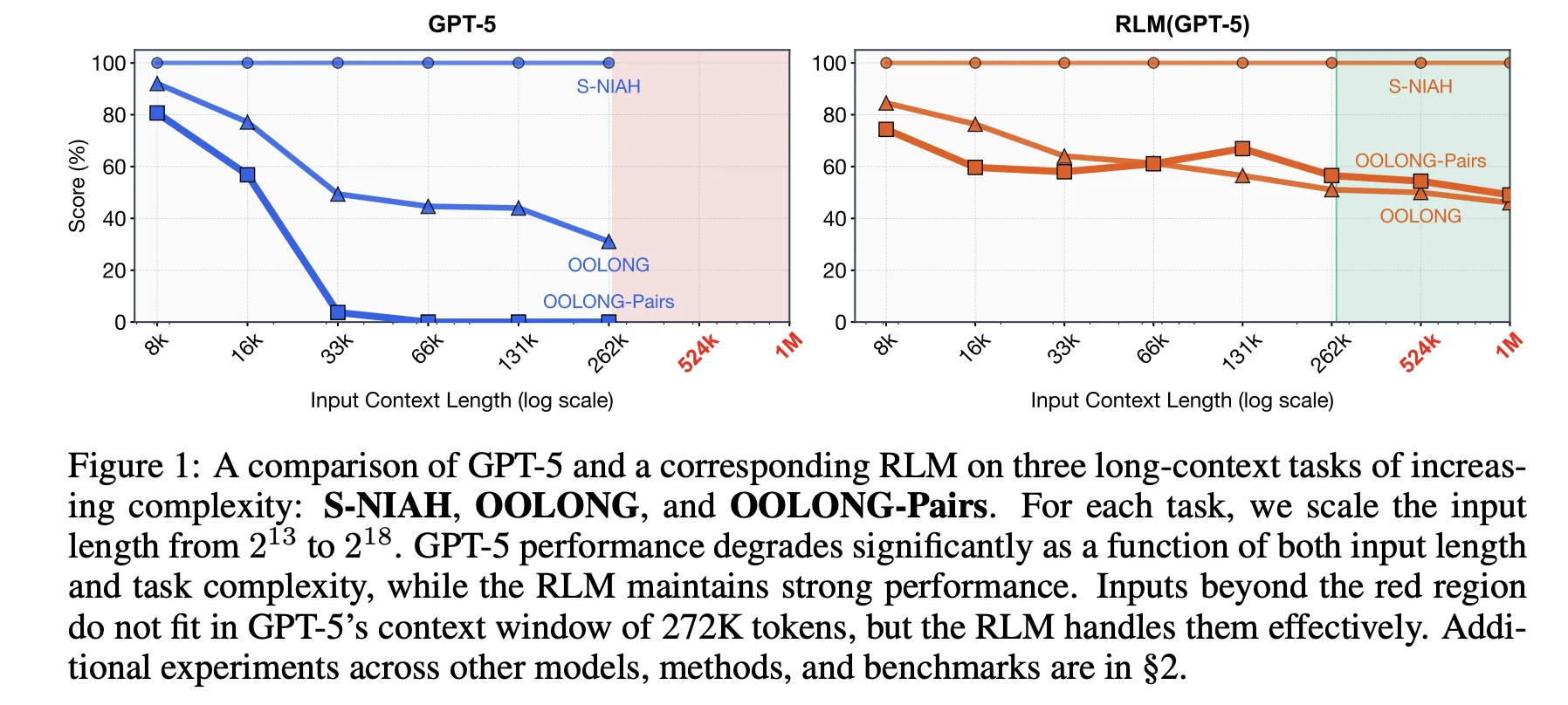

How does it perform in evaluation?

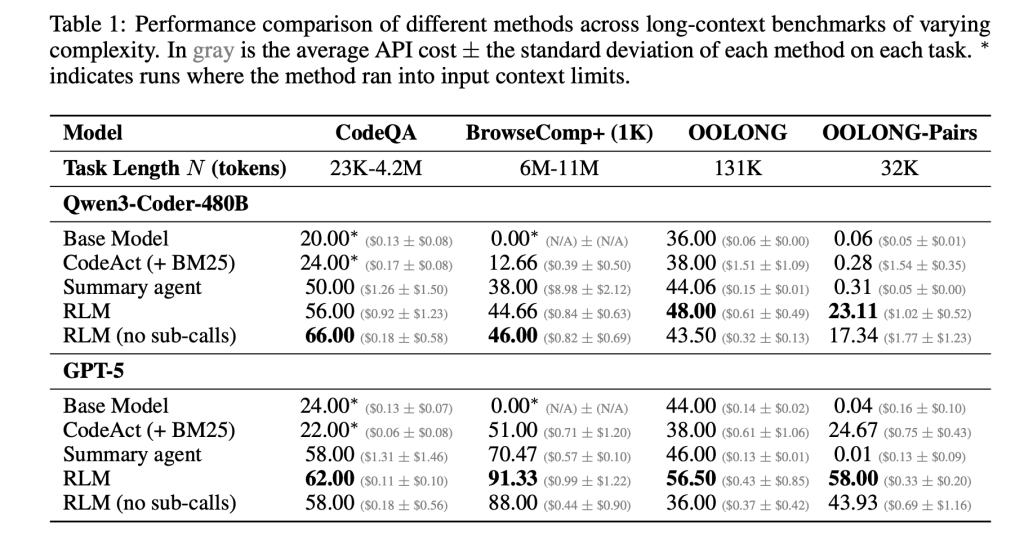

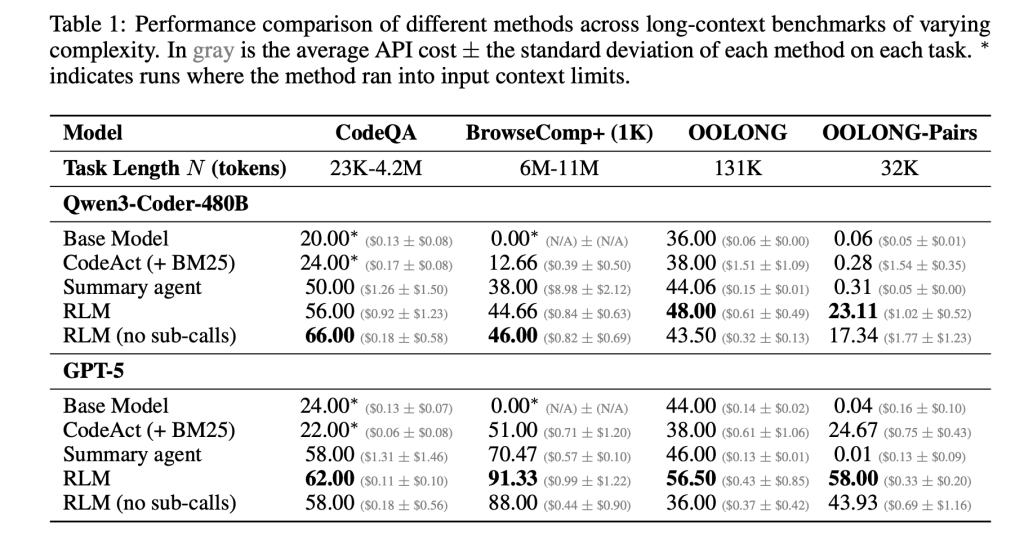

The research paper evaluates this idea on long-context benchmarks with different computational structure. S-NIAH is a constant-complexity needle-in-a-haystack task. BrowseComp-Plus is a multi-hop, web-style question answering benchmark over as many as 1,000 documents. OOLONG is a linear-complexity long-context reasoning task where the model has to transform many entries and then aggregate them. OOLONG Pairs increases the difficulty further with quadratic pairwise aggregation over the input. These tasks stress both context length and reasoning depth, not just retrieval.

On these benchmarks, RLMs deliver large accuracy gains over direct LLM calls and common long-context agents. For GPT-5 on CodeQA, a long-document question answering setup, the base model reaches 24.00 accuracy, a summarization agent reaches 41.33, the RLM reaches 62.00 and the RLM without recursion reaches 66.00. For Qwen3-Coder-480B-A35B, the base model scores 20.00, a CodeAct retrieval agent 52.00, and the RLM 56.00, with a REPL-only variant at 44.66.

The gains are largest on the hardest setting, OOLONG Pairs. For GPT-5, the direct model is nearly unusable with an F1 of 0.04. The summarization and CodeAct agents sit near 0.01 and 24.67. The full RLM reaches 58.00 F1 and the non-recursive REPL variant still achieves 43.93. For Qwen3-Coder, the base model stays below 0.10 F1, while the full RLM reaches 23.11 and the REPL-only version 17.34. These numbers show that both the REPL and the recursive sub-calls matter on dense quadratic tasks.

BrowseComp-Plus highlights effective context extension. The corpus ranges from about 6M to 11M tokens, which is between one and two orders of magnitude beyond the 272k-token context window of GPT-5 (about 22 to 40 times). RLM with GPT-5 maintains strong performance even when given 1,000 documents in the environment, while standard GPT-5 baselines degrade as the number of documents grows. On this benchmark, RLM GPT-5 achieves around 91.33 accuracy at an average cost of 0.99 USD per query, while a hypothetical model that reads the full context directly would cost between 1.50 and 2.75 USD at current pricing.
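A quick arithmetic check of the figures quoted above, written as a small script. All constants come from the paragraph itself; the variable names are only illustrative.

```python
import math

CONTEXT_WINDOW = 272_000               # GPT-5 context window quoted above, in tokens
CORPUS_TOKENS = [6_000_000, 11_000_000]
RLM_COST = 0.99                        # average USD per query for RLM GPT-5
DIRECT_COST = [1.50, 2.75]             # hypothetical full-context read, USD per query

# How many times larger than the window is the corpus, and how many
# full windows would be needed at minimum just to cover it once?
ratios = [tokens / CONTEXT_WINDOW for tokens in CORPUS_TOKENS]
windows = [math.ceil(r) for r in ratios]
for tokens, r, w in zip(CORPUS_TOKENS, ratios, windows):
    print(f"{tokens:,} tokens: {r:.1f}x the window, at least {w} full windows")

# Relative saving of the reported RLM cost against each hypothetical direct cost.
savings = [1 - RLM_COST / cost for cost in DIRECT_COST]
for cost, s in zip(DIRECT_COST, savings):
    print(f"vs {cost:.2f} USD per query: RLM is {s:.0%} cheaper")
```

Under these numbers the corpus spans roughly 22 to 40 context windows, and the reported RLM cost is about a third to two thirds lower than the hypothetical direct read.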

The research paper also analyzes the trajectories of RLM runs. Several behavior patterns emerge. The model usually starts with a peek step where it inspects the first few thousand characters of the context. It then uses grep-style filtering with regex or keyword search to narrow down the relevant lines. For more complex queries, it partitions the context into chunks and calls recursive LMs on each chunk to label or extract, followed by programmatic aggregation. On long-output tasks, the RLM stores partial outputs in variables and stitches them together, which bypasses the output length limits of the base model.
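The patterns above (peek, grep-style filter, chunk and sub-call, aggregate, stitch) can be illustrated with a toy trajectory. This is a sketch under assumptions: `sub_lm` is a placeholder that counts a keyword instead of calling a real model, and the synthetic ticket data is invented for the example.

```python
import re

def sub_lm(prompt: str) -> str:
    """Placeholder sub-model (assumption): 'labels' a chunk by counting a keyword."""
    return str(prompt.count("refund"))

# Synthetic long context: 1,000 short support tickets, one per line.
context = "\n".join(
    f"ticket {i}: customer asks about {'refund' if i % 7 == 0 else 'shipping'}"
    for i in range(1000)
)

# 1. Peek: look at the start of the context to learn its format.
preview = context[:300]

# 2. Grep-style filtering: keep only lines that match a keyword.
lines = context.splitlines()
refund_lines = [line for line in lines if re.search(r"\brefund\b", line)]

# 3. Chunk on line boundaries and issue one sub-call per chunk.
chunks = ["\n".join(lines[i:i + 100]) for i in range(0, len(lines), 100)]
labels = [int(sub_lm(chunk)) for chunk in chunks]

# 4. Programmatic aggregation of the per-chunk results.
total_refunds = sum(labels)

# 5. Stitch a long output from pieces kept in variables.
ticket_ids = [line.split(":")[0] for line in refund_lines]
long_output = ", ".join(ticket_ids)

print(total_refunds, len(refund_lines), long_output[:40])
```

Chunking on line boundaries keeps each record intact, so the per-chunk counts add up to the same total as the grep-style filter.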

New from Prime Intellect

The Prime Intellect team has turned this concept into a concrete environment, RLMEnv, integrated into their verifiers stack and Environments Hub. In their design, the main RLM has only a Python REPL, while the sub-LLMs get the heavy tools such as web search or file access. The REPL exposes an llm_batch function so that the root model can fan out many subqueries in parallel, and an answer variable where the final solution must be written and flagged as ready. This isolates token-heavy tool output from the main context and lets the RLM delegate expensive operations to sub-models.
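A rough sketch of how a parallel fan-out helper and an answer variable could fit together. Everything here is an assumption made for illustration: the signature of `llm_batch`, the `sub_llm` stub, and the `content` / `ready` keys of `answer` are hypothetical and are not taken from the RLMEnv source.

```python
from concurrent.futures import ThreadPoolExecutor

def sub_llm(prompt: str) -> str:
    """Placeholder sub-LLM (assumption). In the design above, sub-LLMs hold the heavy tools."""
    return f"result for: {prompt}"

def llm_batch(prompts: list[str], max_workers: int = 8) -> list[str]:
    """Run sub-queries concurrently; results come back in the same order as the prompts."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(sub_llm, prompts))

# Hypothetical shape of the answer variable: content plus a readiness flag.
answer = {"content": "", "ready": False}

# The root model fans out subqueries, then writes only the condensed result.
questions = [f"Summarize search results for topic {i}" for i in range(5)]
results = llm_batch(questions)
answer["content"] = "\n".join(results)
answer["ready"] = True

print(answer["ready"], len(results))
```

The point of the split is that verbose tool output stays inside the sub-calls, while the root model only handles the short strings that `llm_batch` returns.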

Prime Intellect evaluates this implementation on four environments. DeepDive tests web research with search and open tools and very verbose pages. Math python exposes a Python REPL for hard competition-style math problems. Oolong reuses the long-context benchmark inside RLMEnv. Verbatim copy focuses on exact reproduction of complex strings across content types such as JSON, CSV and mixed codes. Across these environments, GPT-5-mini and the INTELLECT-3-MoE model both benefit from the RLM scaffold in success rate and in robustness to very long contexts, especially when tool output would otherwise swamp the model context.

The authors of the research paper and the Prime Intellect team both stress that current implementations are not fully optimized. RLM calls are synchronous, recursion depth is limited and the cost distribution has heavy tails due to very long trajectories. The real opportunity is to combine RLM scaffolding with dedicated reinforcement learning so that models learn better chunking, recursion and tool-usage policies over time. If that happens, RLMs provide a framework where improvements in base models and in systems design translate directly into more capable long-horizon agents that can consume 10M-plus-token environments without context rot.

Key Takeaways

Here are 5 concise takeaways from the sections above.

- RLMs reframe long context as an environment variable: Recursive Language Models treat the entire prompt as an external string in a Python-style REPL, which the LLM inspects and transforms through code, instead of ingesting all tokens directly into the Transformer context.

- Inference-time recursion extends context to 10M-plus tokens: RLMs let a root model recursively call sub-LLMs on selected snippets of the context, which enables effective processing of prompts one to two orders of magnitude longer than the base context window, reaching 10M-plus tokens on BrowseComp-Plus-style workloads.

- RLMs outperform common long-context scaffolds on hard benchmarks: Across S-NIAH, BrowseComp-Plus, OOLONG and OOLONG Pairs, the RLM variants of GPT-5 and Qwen3-Coder improve accuracy and F1 over direct model calls, retrieval agents such as CodeAct, and summarization agents, while keeping the cost per query comparable or lower.

- REPL-only variants already help, recursion matters for quadratic tasks: An ablation that only exposes the REPL without recursive sub-calls still improves performance on some tasks, which shows the value of offloading context into the environment, but full RLMs are needed to achieve large gains in information-dense settings such as OOLONG Pairs.

- Prime Intellect operationalizes RLMs through RLMEnv and INTELLECT-3: The Prime Intellect team implements the RLM paradigm as RLMEnv, where the root LM controls a sandboxed Python REPL, calls tools through sub-LMs and writes the final result to an answer variable, and reports consistent gains on the DeepDive, math python, Oolong and verbatim copy environments with models such as INTELLECT-3.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among audiences.