Google AI Releases MedGemma-1.5: Latest Update to Its Open Medical AI Models for Developers

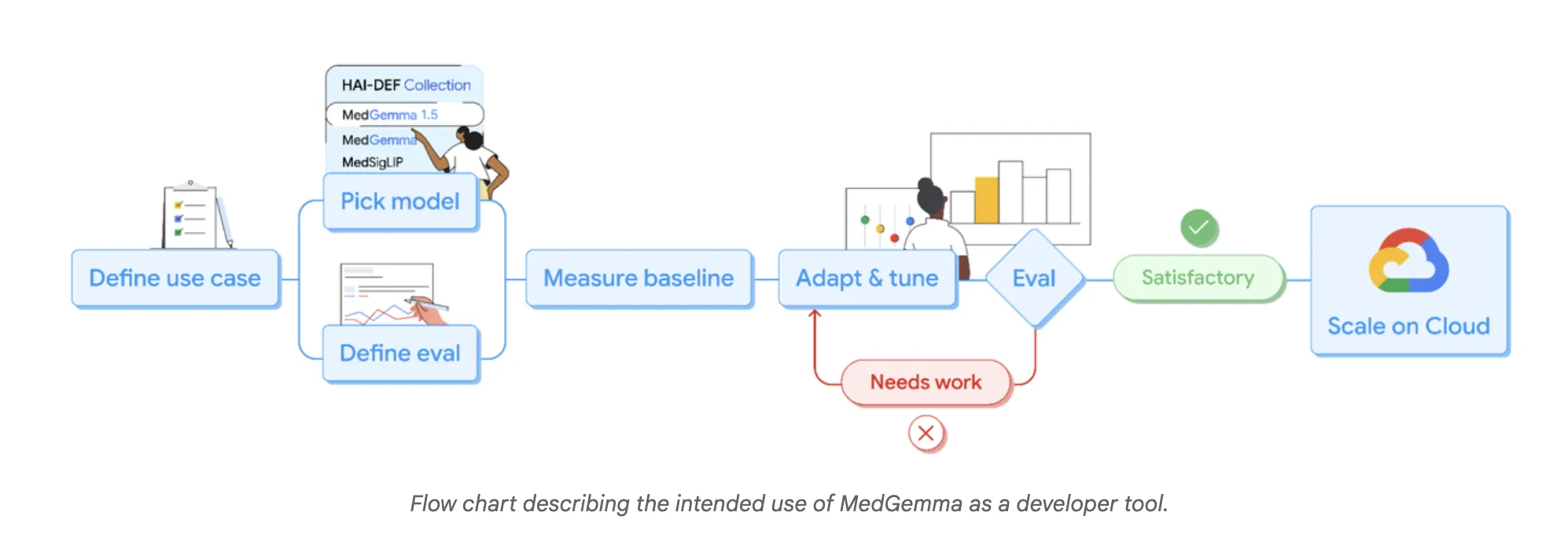

Google Research has expanded its Health AI Developer Foundations (HAI-DEF) program with the release of MedGemma-1.5. The model is released as an open-weight starting point for developers who want to build medical imaging, clinical text and speech systems and then adapt them to local workflows and regulations.

MedGemma 1.5, a small multimodal model for real clinical data

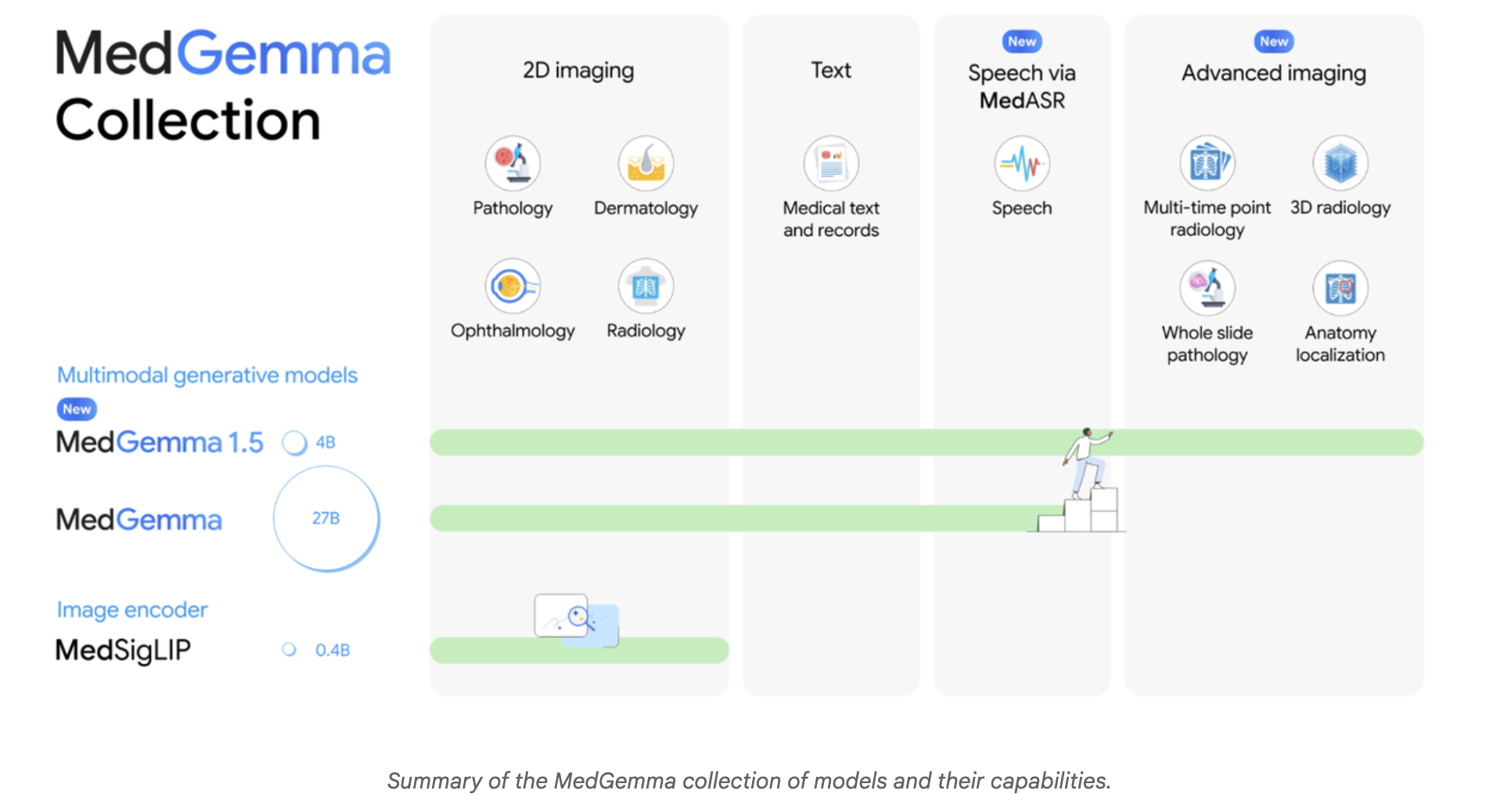

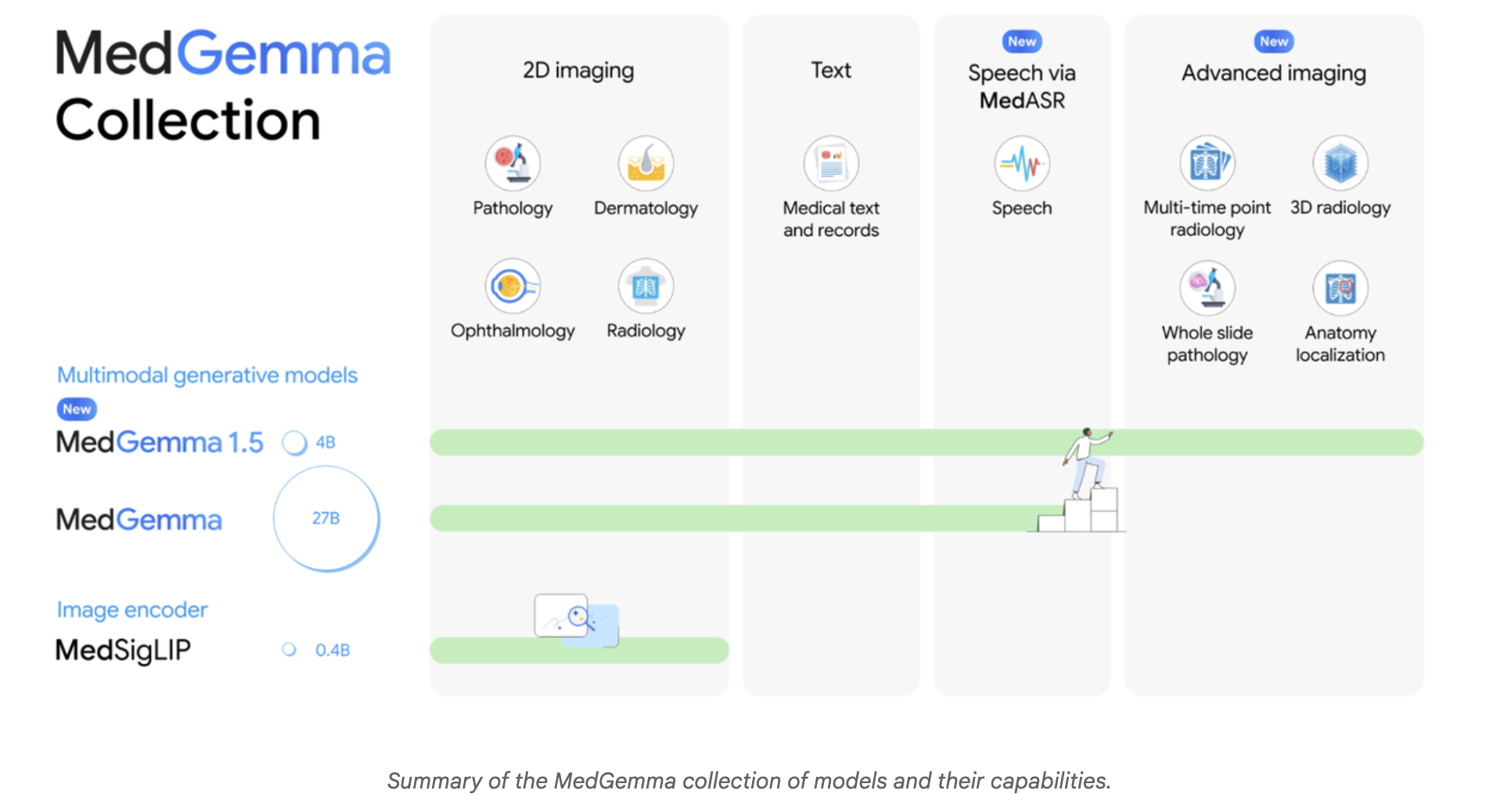

MedGemma is a family of medical generative models built on Gemma. The new release, MedGemma-1.5-4B, targets developers who need a compact model that can still handle real clinical data. The earlier MedGemma-1-27B model remains available for more demanding, text-heavy use cases.

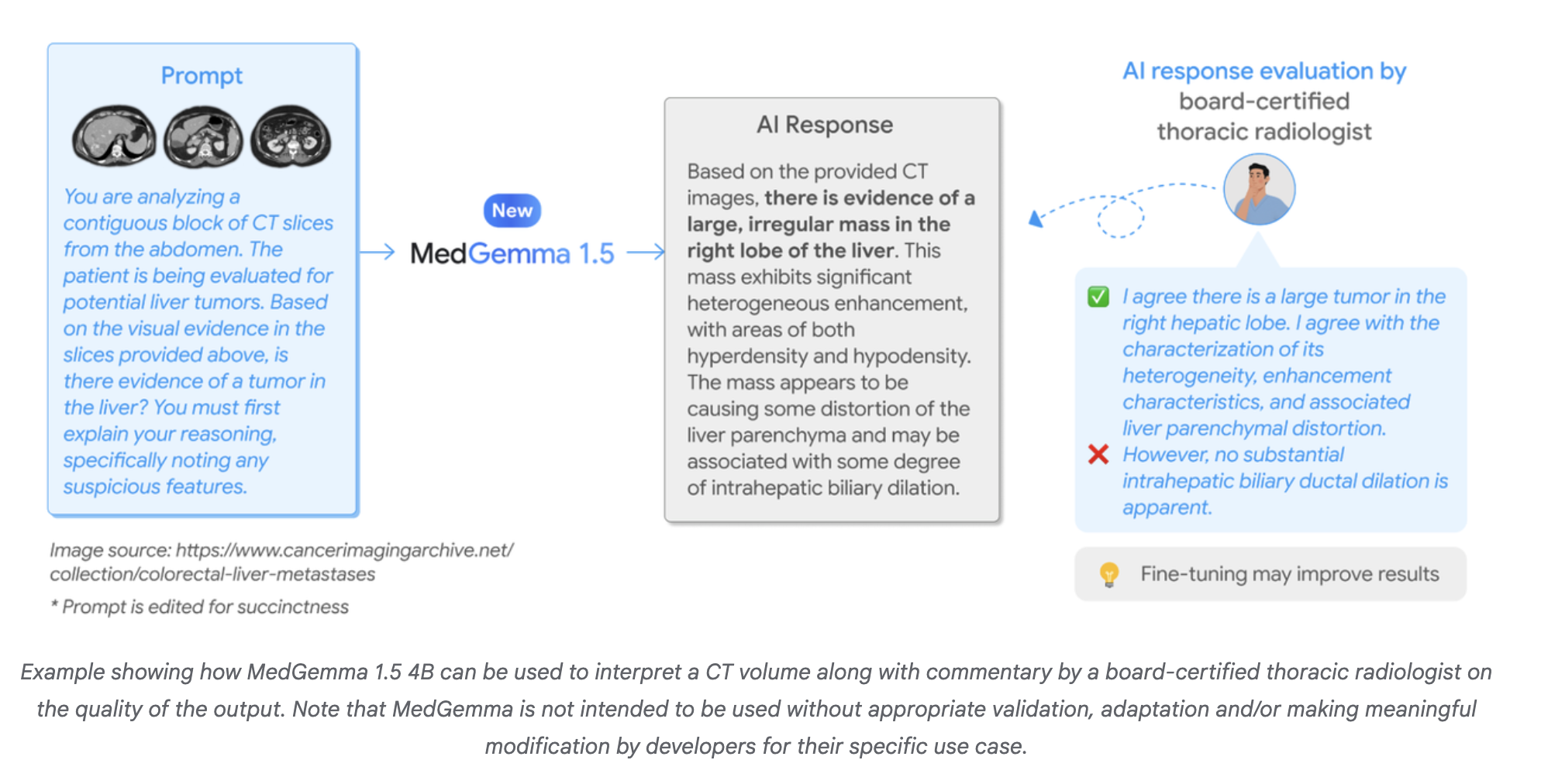

MedGemma-1.5-4B is multimodal. It accepts text, two-dimensional images, high-dimensional volumes such as CT and MRI, and whole-slide pathology images. Because the model is part of the Health AI Developer Foundations program, it is intended as a foundation for fine-tuning, not a ready-made diagnostic device.

Support for advanced CT, MRI and pathology

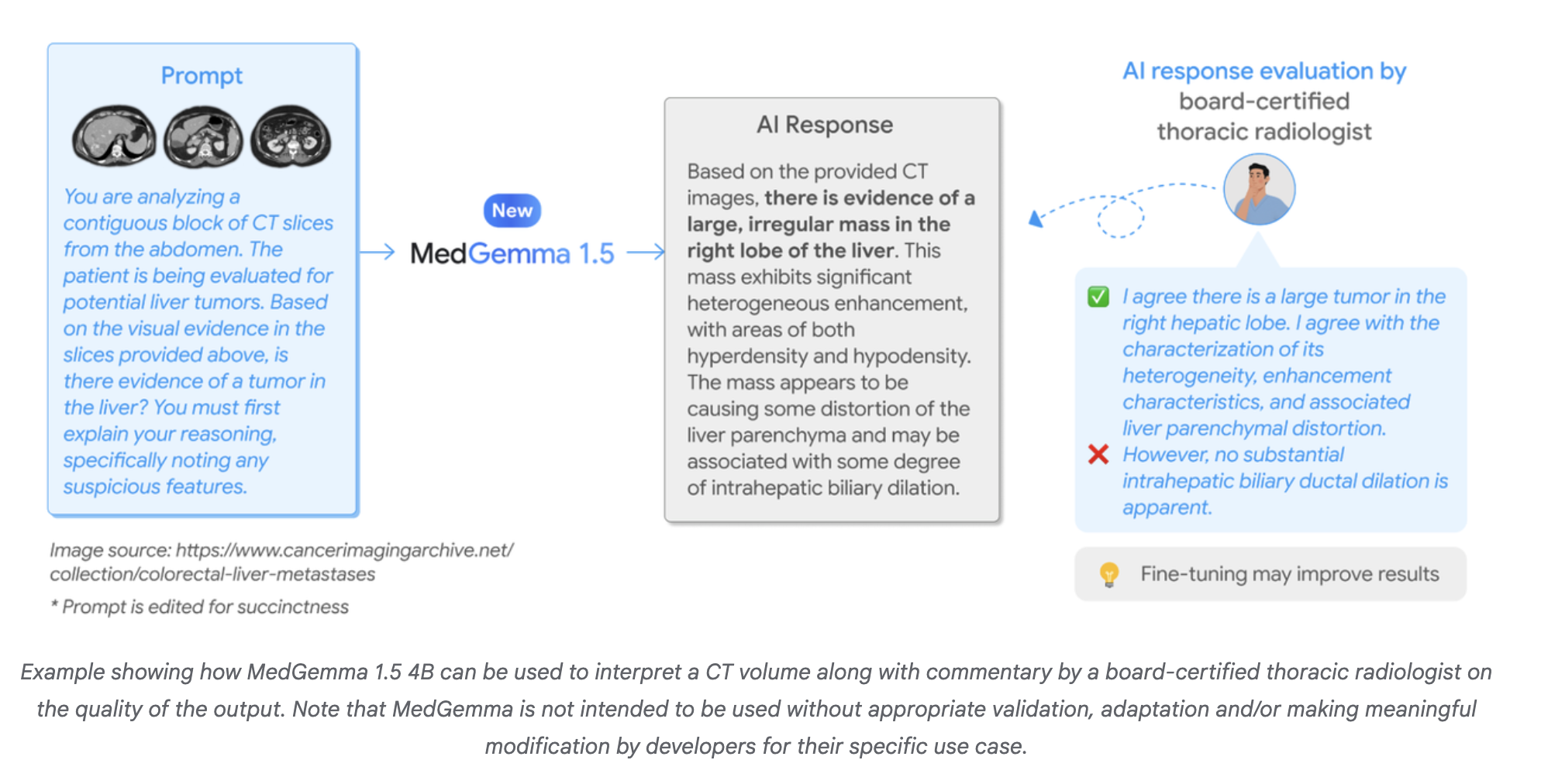

A major change in MedGemma-1.5 is support for high-dimensional imaging. The model can process three-dimensional CT and MRI volumes as sets of slices together with a natural-language prompt. It can also process large whole-slide histopathology images by working over patches extracted from the slide.
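The article does not say how slices or patches are chosen. As a minimal sketch, assuming evenly spaced slice sampling under a fixed slice budget and a non-overlapping patch grid over the slide, the index arithmetic could look like the following. The function names and the `max_slices` and `patch_size` parameters are hypothetical, not part of MedGemma's published preprocessing.

```python
def sample_slice_indices(num_slices: int, max_slices: int) -> list[int]:
    """Pick up to max_slices evenly spaced slice indices from a volume (hypothetical budget)."""
    if num_slices <= 0 or max_slices <= 0:
        return []
    if num_slices <= max_slices:
        return list(range(num_slices))  # small volume: keep every slice
    if max_slices == 1:
        return [num_slices // 2]  # single slice: take the middle one
    # Spread indices from the first slice to the last slice, inclusive.
    step = (num_slices - 1) / (max_slices - 1)
    return [round(i * step) for i in range(max_slices)]


def tile_coordinates(width: int, height: int, patch_size: int) -> list[tuple[int, int]]:
    """Top-left (x, y) corners of non-overlapping patches that fit fully inside the slide."""
    return [
        (x, y)
        for y in range(0, height - patch_size + 1, patch_size)
        for x in range(0, width - patch_size + 1, patch_size)
    ]
```

For example, `sample_slice_indices(120, 5)` returns `[0, 30, 60, 89, 119]`, and `tile_coordinates(1024, 512, 256)` yields eight 256-pixel tiles. A real pipeline would typically also drop background tiles and resize each slice or patch to the model's input resolution.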

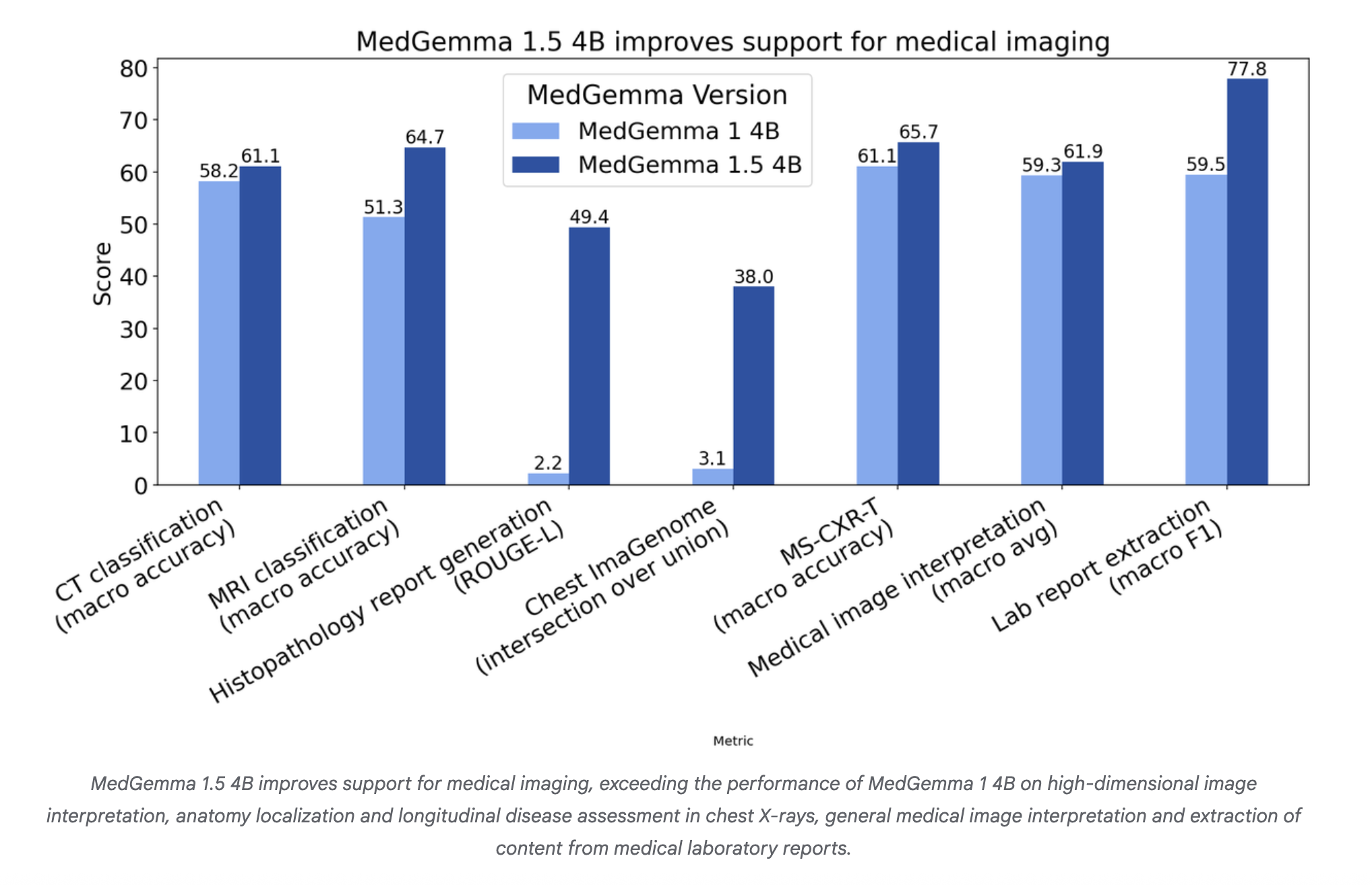

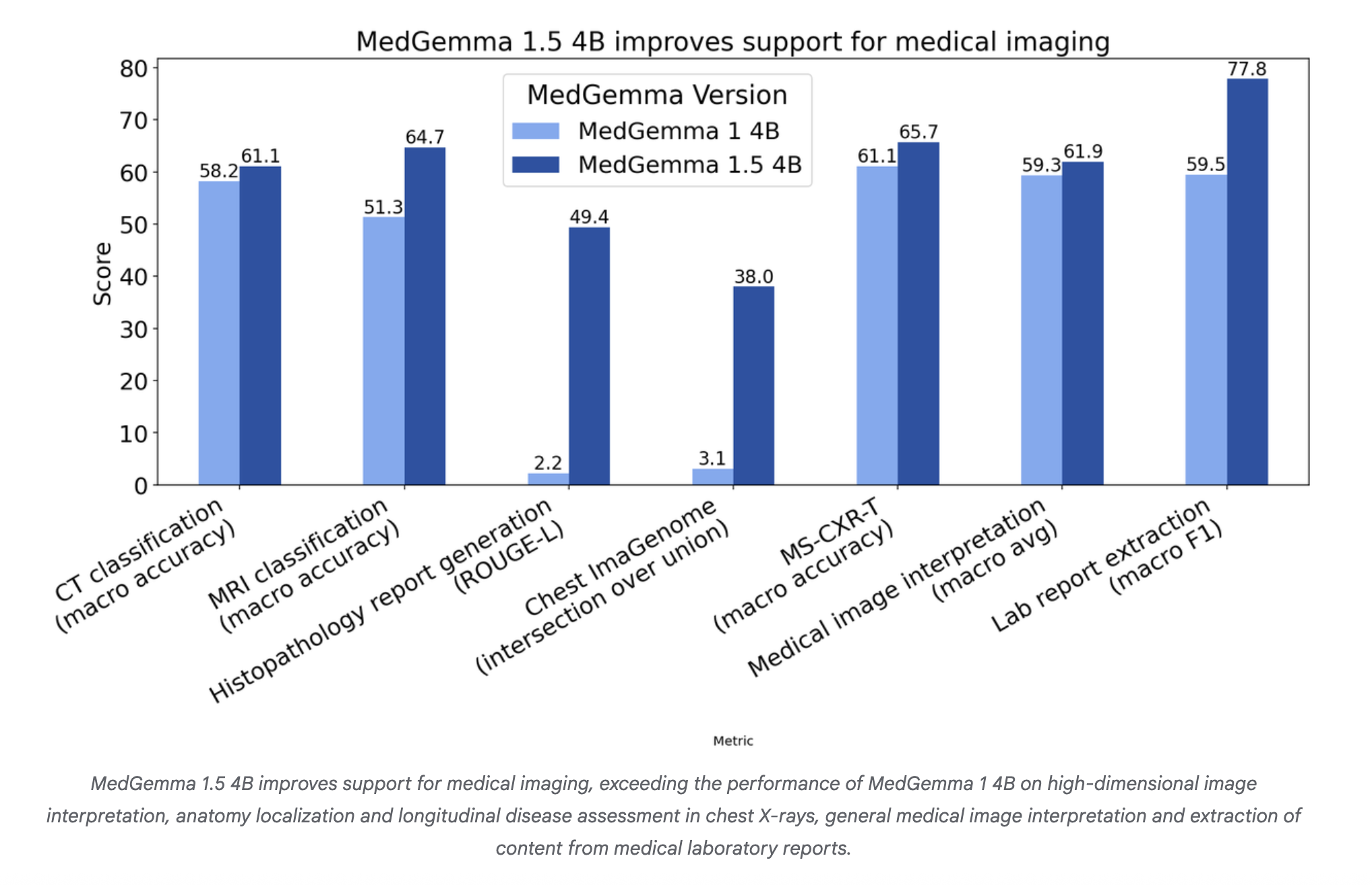

On internal benchmarks, MedGemma-1.5 improves accuracy on disease-related CT findings from 58% to 61% and on MRI disease findings from 51% to 65%, averaged over findings. For histopathology, the ROUGE-L score on single-slide cases rises from 0.02 to 0.49, close to the 0.498 ROUGE-L of the task-specific PolyPath model.
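ROUGE-L scores a generated report against a reference using the longest common subsequence (LCS) of their tokens. A minimal sketch of the F1 variant, assuming lowercase whitespace tokenization; official scorers such as Google's `rouge-score` package apply their own tokenization and optional stemming, so numbers will not match exactly.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0] * (len(b) + 1)
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr[j] = prev[j - 1] + 1  # extend the common subsequence
            else:
                curr[j] = max(prev[j], curr[j - 1])  # carry the best length so far
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1: harmonic mean of LCS-based precision and recall."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

With the reference `invasive ductal carcinoma grade 2` and the candidate `ductal carcinoma grade 3`, the LCS is three tokens, giving precision 3/4, recall 3/5 and a ROUGE-L F1 of about 0.67.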

Imaging and report extraction benchmarks

MedGemma-1.5 also improves several benchmarks that are closer to production workflows.

On the Chest ImaGenome benchmark for anatomical localization in chest X-rays, intersection over union (IoU) improves from 3% to 38%. On the MS-CXR-T benchmark for longitudinal chest X-ray comparison, macro accuracy increases from 61% to 66%.
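Localization on Chest ImaGenome is scored as intersection over union (IoU): the overlap area between a predicted and a reference region divided by the area of their union. A minimal sketch for axis-aligned boxes, assuming an `(x_min, y_min, x_max, y_max)` box convention; the benchmark's exact region format is not given in the article.

```python
Box = tuple[float, float, float, float]  # assumed (x_min, y_min, x_max, y_max)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the intersection rectangle (may be empty).
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

Two 10×10 boxes offset by half their width share 50 units of area out of a 150-unit union, so `box_iou((0, 0, 10, 10), (5, 0, 15, 10))` is 1/3.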

Across single-image benchmarks covering chest radiography, dermatology, histopathology and ophthalmology, average accuracy rises from 59% to 62%. These are simple single-image tasks, useful as sanity checks during domain adaptation.
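One way to use such single-image tasks as sanity checks is to score each domain before and after fine-tuning and flag any domain whose accuracy drops. A minimal sketch; the function names, the 2-point `tolerance` and the unweighted mean across domains are assumptions, since the article does not say how its average is weighted.

```python
def accuracy(predictions: list[str], labels: list[str]) -> float:
    """Fraction of predictions that exactly match their labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and the same length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def regression_report(
    before: dict[str, float], after: dict[str, float], tolerance: float = 0.02
) -> tuple[float, float, list[str]]:
    """Compare per-domain accuracy before and after adaptation (hypothetical helper).

    Returns the unweighted mean accuracy before, the mean after, and the domains
    whose accuracy dropped by more than `tolerance`.
    """
    domains = sorted(before)
    if not domains:
        raise ValueError("need at least one domain")
    mean_before = sum(before[d] for d in domains) / len(domains)
    mean_after = sum(after[d] for d in domains) / len(domains)
    regressed = [d for d in domains if before[d] - after[d] > tolerance]
    return mean_before, mean_after, regressed
```

Pass it per-domain accuracies from your own evaluation runs, for example keyed by `chest_xray`, `dermatology`, `histopathology` and `ophthalmology`. A non-empty `regressed` list is a signal to inspect the adaptation data even when the overall average improves.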

MedGemma-1.5 also improves structured extraction from documents. On medical laboratory reports, the model raises macro F1 from 60% to 78% when extracting the lab test type, value and units. For developers, this means less custom rule-based parsing of semi-structured PDF or text reports.
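The article does not define how its lab-report macro F1 is computed. A minimal sketch under stated assumptions: each report is a list of lab entries with hypothetical keys `test`, `value` and `unit`; values and units are matched together with their test name; true positives, false positives and false negatives are pooled over reports per field; and the three per-field F1 scores are averaged without weighting.

```python
FIELDS = ("test", "value", "unit")  # hypothetical schema: one dict per lab result


def _norm(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences still match."""
    return " ".join(str(text).lower().split())


def _items(entries: list[dict], field: str) -> set:
    # Key value and unit by the test name so "5.1" for glucose does not match "5.1" for potassium.
    if field == "test":
        return {_norm(e["test"]) for e in entries}
    return {(_norm(e["test"]), _norm(e[field])) for e in entries}


def macro_f1(predicted: list[list[dict]], gold: list[list[dict]]) -> float:
    """Unweighted mean of per-field F1 scores, with counts pooled over all reports."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must cover the same reports")
    per_field = []
    for field in FIELDS:
        tp = fp = fn = 0
        for pred_entries, gold_entries in zip(predicted, gold):
            p, g = _items(pred_entries, field), _items(gold_entries, field)
            tp += len(p & g)
            fp += len(p - g)
            fn += len(g - p)
        denom = 2 * tp + fp + fn
        per_field.append(2 * tp / denom if denom else 0.0)
    return sum(per_field) / len(per_field)
```

If a model extracts both tests and values correctly but writes `mg/dL` where the reference says `mmol/L` for one of two tests, the unit F1 drops to 0.5 and the macro F1 to about 0.83.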

Applications deployed on Google Cloud can now work directly with DICOM, the standard file format used in radiology. This removes the need for a custom preprocessor in many hospital systems.
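The article does not describe the DICOM interface itself. At the file level, a DICOM Part 10 file starts with a 128-byte preamble followed by the four bytes `DICM`, which a quick check can use to route files before handing them to a DICOM-aware service. A minimal sketch with hypothetical helper names; it only recognizes the container and does not parse metadata or pixel data (a dedicated library such as pydicom would do that), and DICOM data sent over the network without the Part 10 file header will not pass this check.

```python
DICOM_PREAMBLE_LENGTH = 128  # fixed-size preamble defined by DICOM Part 10
DICOM_MAGIC = b"DICM"        # prefix that follows the preamble


def looks_like_dicom_part10(header: bytes) -> bool:
    """True if the bytes contain 'DICM' right after the 128-byte preamble."""
    start = DICOM_PREAMBLE_LENGTH
    return header[start:start + len(DICOM_MAGIC)] == DICOM_MAGIC


def is_dicom_file(path: str) -> bool:
    """Read only the first 132 bytes of a file and check for the Part 10 prefix."""
    with open(path, "rb") as fh:
        return looks_like_dicom_part10(fh.read(DICOM_PREAMBLE_LENGTH + len(DICOM_MAGIC)))
```

A routing step might send files that pass `is_dicom_file` to the DICOM path and everything else (PDFs, plain-text reports) to a document-extraction path.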

Medical text reasoning with MedQA and EHRQA

MedGemma-1.5 is not only an imaging model. It also improves baseline performance on medical text tasks.

On MedQA, a multiple-choice benchmark for medical question answering, the 4B model improves accuracy from 64% to 69% compared with the previous MedGemma-1. On EHRQA, a question-answering benchmark over text-based electronic health records, accuracy increases from 68% to 90%.
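Scoring a multiple-choice benchmark such as MedQA comes down to pulling the chosen letter out of free-text output and comparing it with the answer key. A minimal sketch, assuming the prompt asks the model to end with a line like `Answer: C` and that options are lettered A to E; the regex and function names are hypothetical, and unparseable outputs count as wrong.

```python
import re
from typing import Optional

# Hypothetical output convention: "Answer: C" or "Answer: (C)"; \b rejects words like "Because".
_ANSWER_RE = re.compile(r"Answer:\s*\(?([A-E])\b")


def extract_choice(output: str) -> Optional[str]:
    """Return the last answer letter in the model output, or None if there is none."""
    matches = _ANSWER_RE.findall(output)
    return matches[-1] if matches else None


def mcq_accuracy(outputs: list[str], gold: list[str]) -> float:
    """Fraction of outputs whose extracted letter equals the gold letter."""
    if len(outputs) != len(gold) or not gold:
        raise ValueError("outputs and gold must be non-empty and the same length")
    correct = sum(extract_choice(o) == g.upper() for o, g in zip(outputs, gold))
    return correct / len(gold)
```

`mcq_accuracy(["Answer: A", "Answer: C", "unsure"], ["A", "B", "C"])` returns 1/3. Taking the last match means a model that reasons through options before committing is scored on its final answer.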

These numbers matter if you plan to use MedGemma-1.5 as the backbone for tools such as chart summarization, guideline grounding or retrieval-augmented generation over clinical notes. The 4B size keeps fine-tuning and serving costs at a practical level.
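A back-of-the-envelope estimate shows why the 4B size matters for serving. Weight memory is roughly parameter count times bytes per parameter. This sketch uses nominal 4B and 27B counts (actual parameter counts, including the vision encoder, differ somewhat) and covers weights only: activations, the KV cache and, for full fine-tuning, gradients and optimizer states add substantially more.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Nominal parameter counts; common storage precisions in bytes per parameter.
for name, params in [("4B", 4e9), ("27B", 27e9)]:
    for dtype, nbytes in [("bf16", 2), ("int8", 1), ("int4", 0.5)]:
        print(f"{name} {dtype}: ~{weight_memory_gb(params, nbytes):.1f} GB")
```

In bf16 the 4B model needs about 8 GB for weights versus about 54 GB for a 27B model, roughly the difference between fitting on a single mid-range GPU and needing a high-memory accelerator.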

MedASR, a domain-tuned model for medical speech recognition

Clinical workflows involve a large amount of dictated speech. MedASR is a new medical automatic speech recognition (ASR) model released alongside MedGemma-1.5.

MedASR uses a Conformer-based architecture that is pre-trained and fine-tuned for clinical audio. It targets tasks such as chest X-ray dictation, radiology reports and general medical notes. The model is available through the same Health AI Developer Foundations channels on Vertex AI and Hugging Face.

In a head-to-head comparison with Whisper-large-v3, a general-purpose ASR model, MedASR reduces the word error rate (WER) for chest X-ray dictation from 12.5% to 5.2%, which corresponds to 58% fewer transcription errors. On a broader internal medical dictation benchmark, MedASR reaches a WER of 5.2% while Whisper-large-v3 has 28.2%, which corresponds to 82% fewer errors.
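WER counts the word-level substitutions, deletions and insertions needed to turn a transcript into the reference, divided by the number of reference words; the "fewer errors" figures are relative reductions in WER. A minimal sketch that reproduces the article's arithmetic; it skips the text normalization (casing, punctuation, numbers) that real ASR evaluations usually apply.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Levenshtein distance over words, keeping one DP row at a time.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of an extra word
                prev[j - 1] + (r != h),  # substitution, or free match
            )
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline: float, new: float) -> float:
    """Fraction of the baseline error rate that the new system removes."""
    return (baseline - new) / baseline


print(word_error_rate("no acute cardiopulmonary process", "no acute cardio pulmonary process"))  # → 0.5
print(round(100 * relative_reduction(12.5, 5.2)))  # → 58
print(round(100 * relative_reduction(28.2, 5.2)))  # → 82
```

Splitting "cardiopulmonary" into two words costs one substitution and one insertion, so a single dictation slip of this kind already yields a 50% WER on a four-word reference.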

Key Takeaways

- MedGemma-1.5-4B is a multimodal medical model that handles text, 2D images, 3D CT and MRI volumes, and whole-slide pathology images. It is released as part of the Health AI Developer Foundations program as a starting point for adaptation to local use cases.

- On imaging benchmarks, MedGemma-1.5 improves accuracy on CT disease findings from 58% to 61% and on MRI disease findings from 51% to 65%, and raises histopathology ROUGE-L from 0.02 to 0.49, close to the 0.498 of the task-specific PolyPath model.

- On downstream clinical-style tasks, MedGemma-1.5 raises Chest ImaGenome intersection over union from 3% to 38%, MS-CXR-T macro accuracy from 61% to 66% and lab report extraction macro F1 from 60% to 78%, while keeping the model size at 4B parameters.

- MedGemma-1.5 also strengthens text reasoning, increasing MedQA accuracy from 64% to 69% and EHRQA accuracy from 68% to 90%, which makes it a stronger backbone for chart summarization and EHR question-answering systems.

- MedASR, a Conformer-based medical ASR model released alongside MedGemma-1.5, reduces word error rate compared with Whisper-large-v3 from 12.5% to 5.2% on chest X-ray dictation and from 28.2% to 5.2% on a broad medical dictation benchmark, giving MedGemma-centered workflows a domain-tuned speech front end.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the power of Artificial Intelligence for the benefit of society. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, reflecting its popularity among readers.