DeepSeek Researchers Apply a 1967 Matrix Normalization Algorithm to Fix Instability in Hyper-Connections

DeepSeek researchers are tackling a specific problem in training large language models. Residual connections made very deep networks trainable, hyper-connections widened that residual stream, and training then became unstable at scale. The new method, mHC (Manifold-Constrained Hyper-Connections), keeps the richer topology of hyper-connections but constrains the mixing behavior to a well-defined manifold so that signals remain numerically stable in very deep stacks.

From Residual Connections to Hyper-Connections

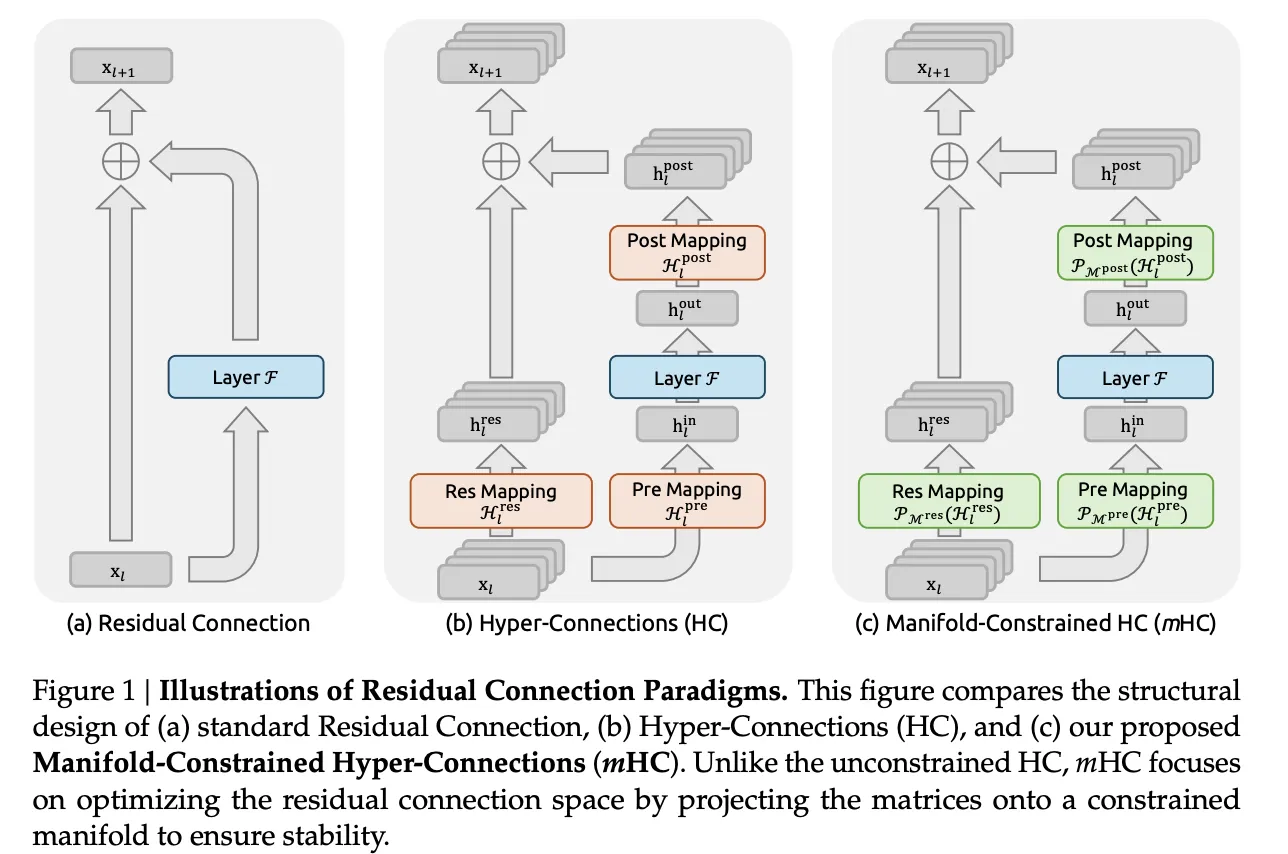

A typical residual connection, as in ResNets and Transformers, propagates activations through $x_{l+1} = x_l + \mathcal{F}(x_l, W_l)$.

This identity path preserves the signal's scale and keeps gradients usable even when many layers are stacked.

Hyper-connections generalize this design. Instead of a single residual vector of size $C$, the model keeps an $n$-stream buffer $x_l \in \mathbb{R}^{n \times C}$. Three learned mappings control how each layer reads from and writes to this buffer:

- $H_l^{\text{pre}}$ selects a mixture of streams as the layer input

- $\mathcal{F}$ is the usual attention or feed-forward sub-layer

- $H_l^{\text{post}}$ writes the result back to the $n$-stream buffer

- $H_l^{\text{res}} \in \mathbb{R}^{n \times n}$ mixes the streams from one layer to the next

The update has the form

$$x_{l+1} = H_l^{\text{res}} x_l + {H_l^{\text{post}}}^{\top} \mathcal{F}(H_l^{\text{pre}} x_l, W_l)$$

With $n$ set to 4, this design adds capacity to the residual path without a significant increase in floating-point cost, which is why hyper-connections improve downstream performance in language models.
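To make the shapes in the update concrete, here is a minimal plain-Python sketch of one hyper-connection step. It assumes $H_l^{\text{pre}}$ and $H_l^{\text{post}}$ are 1-by-$n$ row vectors and uses a hypothetical `toy_sublayer` (an elementwise tanh) in place of the real attention or feed-forward block; `hc_update` and the other helper names are illustrative, not DeepSeek's code.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (m x k) matrix and a (k x p) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def hc_update(
    x: Matrix,
    h_pre: Matrix,
    h_post: Matrix,
    h_res: Matrix,
    sublayer: Callable[[Matrix], Matrix],
) -> Matrix:
    """One hyper-connection step: x_{l+1} = H_res x_l + H_post^T F(H_pre x_l).

    Assumed shapes: x is n x C, h_pre and h_post are 1 x n, h_res is n x n,
    and `sublayer` maps a 1 x C row to a 1 x C row.
    """
    layer_in = matmul(h_pre, x)                      # 1 x C: weighted mix of the streams
    layer_out = sublayer(layer_in)                   # 1 x C: attention/FFN stand-in
    written = matmul(transpose(h_post), layer_out)   # n x C: output spread over the streams
    carried = matmul(h_res, x)                       # n x C: streams mixed by H_res
    return [[c + w for c, w in zip(rc, rw)] for rc, rw in zip(carried, written)]


def toy_sublayer(row: Matrix) -> Matrix:
    """Hypothetical stand-in for F(., W_l): an elementwise tanh with no weights."""
    return [[math.tanh(v) for v in row[0]]]


# With n = 1 and every H equal to [[1.0]], the step reduces to x + F(x).
x = [[0.5, -1.0]]
one = [[1.0]]
print(hc_update(x, one, one, one, toy_sublayer))
```

The $n = 1$ case at the end recovers the ordinary residual update, which is a quick sanity check that the hyper-connection form is a strict generalization of it.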

Why Hyper-Connections Become Unstable

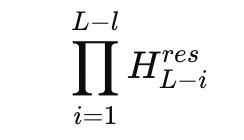

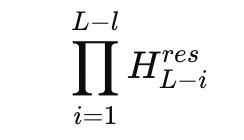

The problem appears when you look at the product of the residual mixing matrices across many layers. In a 27B mixture-of-experts model, DeepSeek studies the composite map

$$H_{l+k}^{\text{res}} \cdots H_{l+1}^{\text{res}} H_l^{\text{res}}$$

and defines the Amax Gain Magnitude from its maximum absolute row sum and maximum absolute column sum. This metric measures worst-case amplification along the forward signal path and the backward gradient path. In the hyper-connection model, this gain peaks at nearly 3000, far from the ideal value of 1 you would expect from a stable residual path.
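A small sketch shows how per-layer deviations compound in the composite map. It assumes the metric is the larger of the maximum absolute row sum and maximum absolute column sum, and it uses a hypothetical 2-by-2 unconstrained mixing matrix whose rows sum to 1.2; this is a toy illustration of the effect, not the paper's measurement on learned matrices.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (m x k) matrix and a (k x p) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def amax_gain(m: Matrix) -> float:
    """Worst-case gain of a square mixing matrix (assumed definition).

    The max absolute row sum bounds forward amplification; the max absolute
    column sum bounds backward (gradient) amplification. Return the larger.
    """
    forward = max(sum(abs(v) for v in row) for row in m)
    backward = max(sum(abs(v) for v in col) for col in zip(*m))
    return max(forward, backward)


def composite(mats: List[Matrix]) -> Matrix:
    """Compose per-layer residual matrices in layer order: H_last ... H_1 H_0."""
    n = len(mats[0])
    out = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for h in mats:
        out = matmul(h, out)
    return out


# Hypothetical unconstrained mixing matrix: each row sums to 1.2, a 20% deviation.
h = [[1.1, 0.1], [0.1, 1.1]]
for depth in (1, 10, 30):
    print(depth, amax_gain(composite([h] * depth)))
```

Because every row of this `h` sums to 1.2, the composite over `depth` layers has gain $1.2^{\text{depth}}$: a modest per-layer excess turns into a factor in the hundreds after 30 layers.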

This means small per-layer deviations compound into very large amplification as depth increases. Training logs show loss spikes and unstable gradient norms relative to the baseline residual model. At the same time, maintaining a multi-stream buffer increases memory traffic per token, which makes naive scaling of hyper-connections unattractive for large language models.

Manifold-Constrained Hyper-Connections

mHC keeps the multi-stream residual design but constrains its dangerous part. The residual mixing matrix $H_l^{\text{res}}$ no longer ranges over the full $n \times n$ space. Instead, it is projected onto the set of doubly stochastic matrices, also called the Birkhoff polytope. In that set, all entries are non-negative and every row and every column sums to 1.

The DeepSeek team enforces this constraint with the classic Sinkhorn-Knopp algorithm from 1967, which alternately normalizes rows and columns to approximate a doubly stochastic matrix. The team uses 20 iterations per layer during training, which is enough to bring the map close to the target manifold while keeping cost under control.
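A minimal plain-Python sketch of the Sinkhorn-Knopp iteration follows. It assumes the raw scores are made positive with an elementwise exponential before normalization; the exact parameterization inside mHC is not described in this article. The function name `sinkhorn_knopp` and the random 4-by-4 input are illustrative, and `iters=20` mirrors the 20 iterations per layer the team reports.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def sinkhorn_knopp(logits: Matrix, iters: int = 20) -> Matrix:
    """Approximately map a square matrix of real scores to a doubly stochastic matrix.

    Assumption of this sketch: entries are made positive with exp(), shifted by
    the global max for numerical safety (a constant factor that the first
    normalization cancels). Rows and columns are then normalized in turn.
    """
    top = max(max(row) for row in logits)
    m = [[math.exp(v - top) for v in row] for row in logits]
    n = len(m)
    for _ in range(iters):
        # Row step: rescale each row so it sums to 1.
        row_sums = [sum(row) for row in m]
        m = [[v / s for v in row] for row, s in zip(m, row_sums)]
        # Column step: rescale each column so it sums to 1.
        col_sums = [sum(col) for col in zip(*m)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return m


rng = random.Random(0)
logits = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(4)]
h_res = sinkhorn_knopp(logits, iters=20)
print("row sums:", [round(sum(row), 6) for row in h_res])
print("col sums:", [round(sum(col), 6) for col in zip(*h_res)])
```

Because the loop ends on a column step, columns sum to 1 up to rounding while rows are only approximately normalized; more iterations tighten the row sums at extra cost, which is the trade-off behind choosing a fixed iteration budget.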

Under this constraint, $H_l^{\text{res}} x_l$ behaves like a convex combination of residual streams. The total signal summed across streams is conserved and its magnitude stays bounded, which eliminates the explosive growth seen in plain hyper-connections; because a product of doubly stochastic matrices is itself doubly stochastic, the bound also holds for the composite map across many layers. The research team also parameterizes the input and output maps so that their coefficients are non-negative, which avoids cancellation between streams and keeps the interpretation relatively clear.
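These properties can be checked numerically with a small sketch. It builds a doubly stochastic matrix as a convex combination of permutation matrices (by the Birkhoff-von Neumann theorem, every doubly stochastic matrix has this form), composes 50 copies as a stand-in for 50 layers, and checks that the composite is still doubly stochastic, that the per-channel sum across streams is conserved, and that no output entry exceeds the largest input magnitude in its channel. The weights, permutations, depth and input are arbitrary illustration values, not taken from the paper.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (m x k) matrix and a (k x p) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def convex_mix(weights: List[float], perms: List[List[int]]) -> Matrix:
    """Convex combination of permutation matrices; doubly stochastic by construction."""
    n = len(perms[0])
    out = [[0.0] * n for _ in range(n)]
    for w, perm in zip(weights, perms):
        for i, j in enumerate(perm):  # permutation matrix has a 1 at (i, perm[i])
            out[i][j] += w
    return out


def is_doubly_stochastic(m: Matrix, tol: float = 1e-9) -> bool:
    nonneg = all(v >= 0.0 for row in m for v in row)
    rows = all(abs(sum(row) - 1.0) < tol for row in m)
    cols = all(abs(sum(col) - 1.0) < tol for col in zip(*m))
    return nonneg and rows and cols


# Hypothetical 4-stream residual matrix reused for 50 "layers".
perms = [[0, 1, 2, 3], [1, 2, 3, 0], [3, 2, 1, 0]]
h = convex_mix([0.6, 0.3, 0.1], perms)
comp = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
for _ in range(50):
    comp = matmul(h, comp)

x = [[1.0, -2.0], [0.5, 3.0], [2.0, 0.0], [-1.0, 1.0]]  # 4 streams, C = 2
y = matmul(comp, x)
print(is_doubly_stochastic(comp))
print([sum(col) for col in zip(*x)], [sum(col) for col in zip(*y)])
```

Column sums of 1 are what conserve the per-channel total across streams, while row sums of 1 with non-negative entries make each output stream a weighted average of the inputs, so the composite's worst-case gain stays at 1.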

With mHC, the composite Amax Gain Magnitude stays bounded and peaks at around 1.6 in the 27B model, compared with peaks near 3000 in the unconstrained variant. That is a reduction of about three orders of magnitude in worst-case amplification, and it comes from a simple mathematical constraint rather than tuned tricks.

Systems Work and Training Overhead

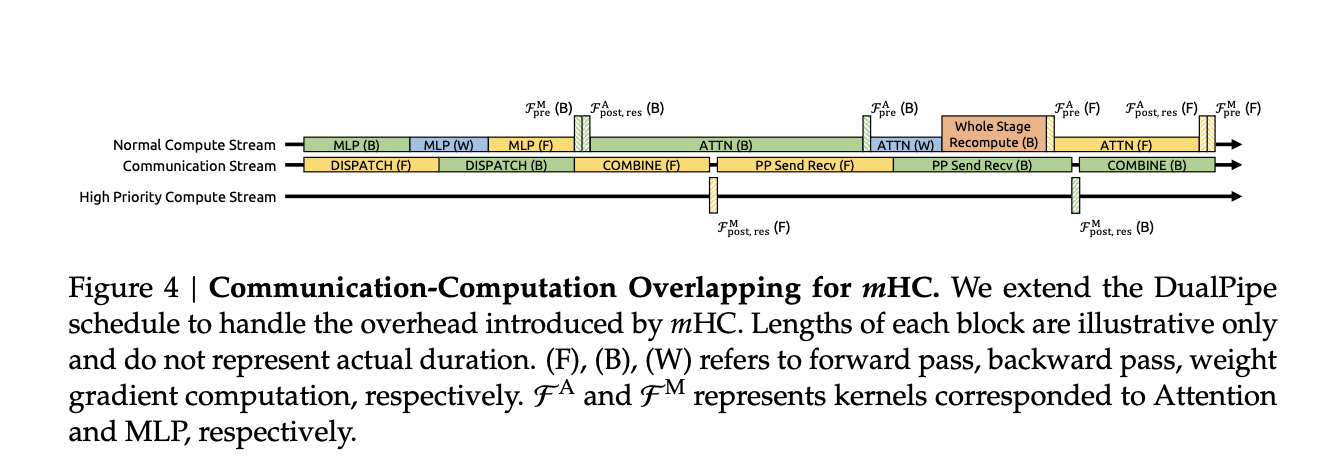

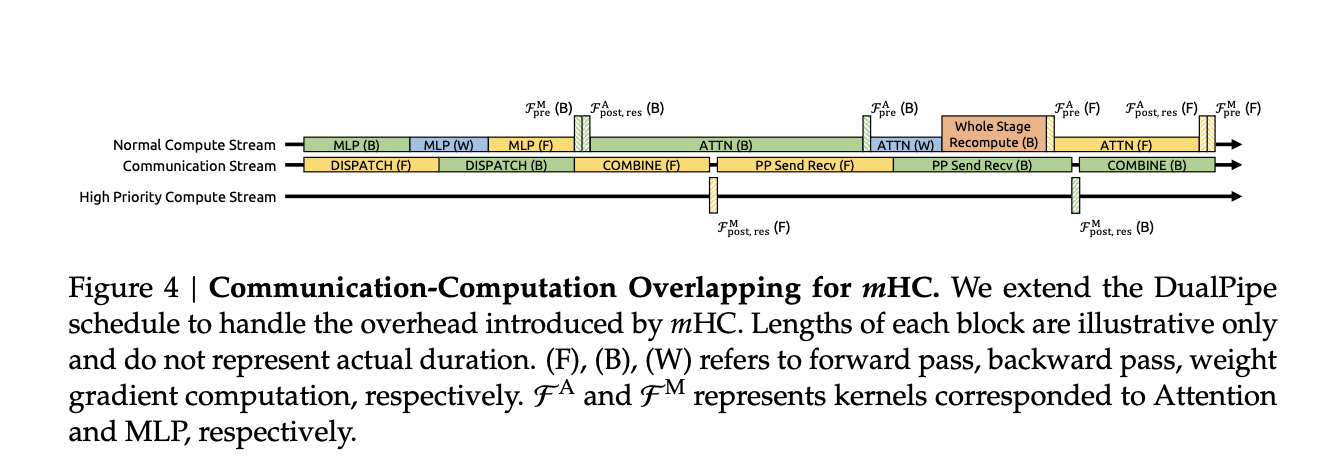

Routing every residual mixing matrix through Sinkhorn-style iterations adds cost on paper. The research team addresses this with several systems optimizations:

- Fused kernels combine RMSNorm, the projections and the mHC mapping gates to keep memory traffic low.

- Activation recomputation saves memory by recomputing mHC activations during backpropagation over layer blocks.

- Integration with a DualPipe-style pipeline schedule overlaps communication and recomputation, so the extra work does not stall the training pipeline.

In large-scale in-house training runs, mHC with expansion rate $n = 4$ adds about 6.7 percent to training time relative to the baseline architecture. That figure already includes both the extra compute from Sinkhorn-Knopp and the infrastructure optimizations.

Empirical Results

The research team trains 3B, 9B and 27B mixture-of-experts models and evaluates them on standard language model benchmarks, including BBH, DROP, GSM8K, HellaSwag, MMLU, PIQA and TriviaQA.

For the 27B model, the reported numbers on a subset of tasks show a clear pattern:

- Baseline: BBH 43.8, DROP F1 47.0

- Hyper-connections (HC): BBH 48.9, DROP F1 51.6

- mHC: BBH 51.0, DROP F1 53.9

So hyper-connections already improve on the plain residual design, and constrained hyper-connections extract further gains while restoring stability. The same trend appears on other benchmarks and across model sizes, and the scaling curves suggest the benefit persists across compute budgets and throughout the training trajectory, not only at convergence.

Key Takeaways

- mHC stabilizes widened residual streams: mHC (Manifold-Constrained Hyper-Connections) widens the residual path into 4 interacting streams, as HC does, but constrains the residual mixing matrices to the manifold of doubly stochastic matrices, so long-range propagation stays well behaved instead of explosive.

- Explosive gain reduced from ≈3000 to ≈1.6: in the 27B MoE model, the Amax Gain Magnitude of the composite residual map peaks near 3000 for unconstrained HC, while mHC keeps this metric bounded around 1.6, which removes the explosive residual-stream behavior that destabilized training.

- Sinkhorn-Knopp enforces doubly stochastic mixing: each residual mixing matrix is projected with about 20 Sinkhorn-Knopp iterations so that rows and columns sum to 1, making the map (approximately) a convex combination of permutation matrices, which recovers identity-like behavior while still allowing rich cross-stream communication.

- Small training overhead, measurable downstream gains: across the 3B, 9B and 27B DeepSeek MoE models, mHC improves benchmark accuracy, for example by 2.1 points on BBH over HC in the 27B model, while adding only about 6.7 percent to training time thanks to fused kernels, recomputation and pipeline-aware scheduling.

- A new scaling axis for LLM design: instead of scaling only parameters or context length, mHC shows that explicitly designing the topology and manifold constraints of the residual stream, for example its width and structure, is a practical way to unlock better performance and stability in future large language models.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the power of Artificial Intelligence for the benefit of society. His latest endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its extensive coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among readers.