Nous Research Releases NousCoder-14B: A Competitive Olympiad Programming Model Post-Trained on Qwen3-14B via Reinforcement Learning

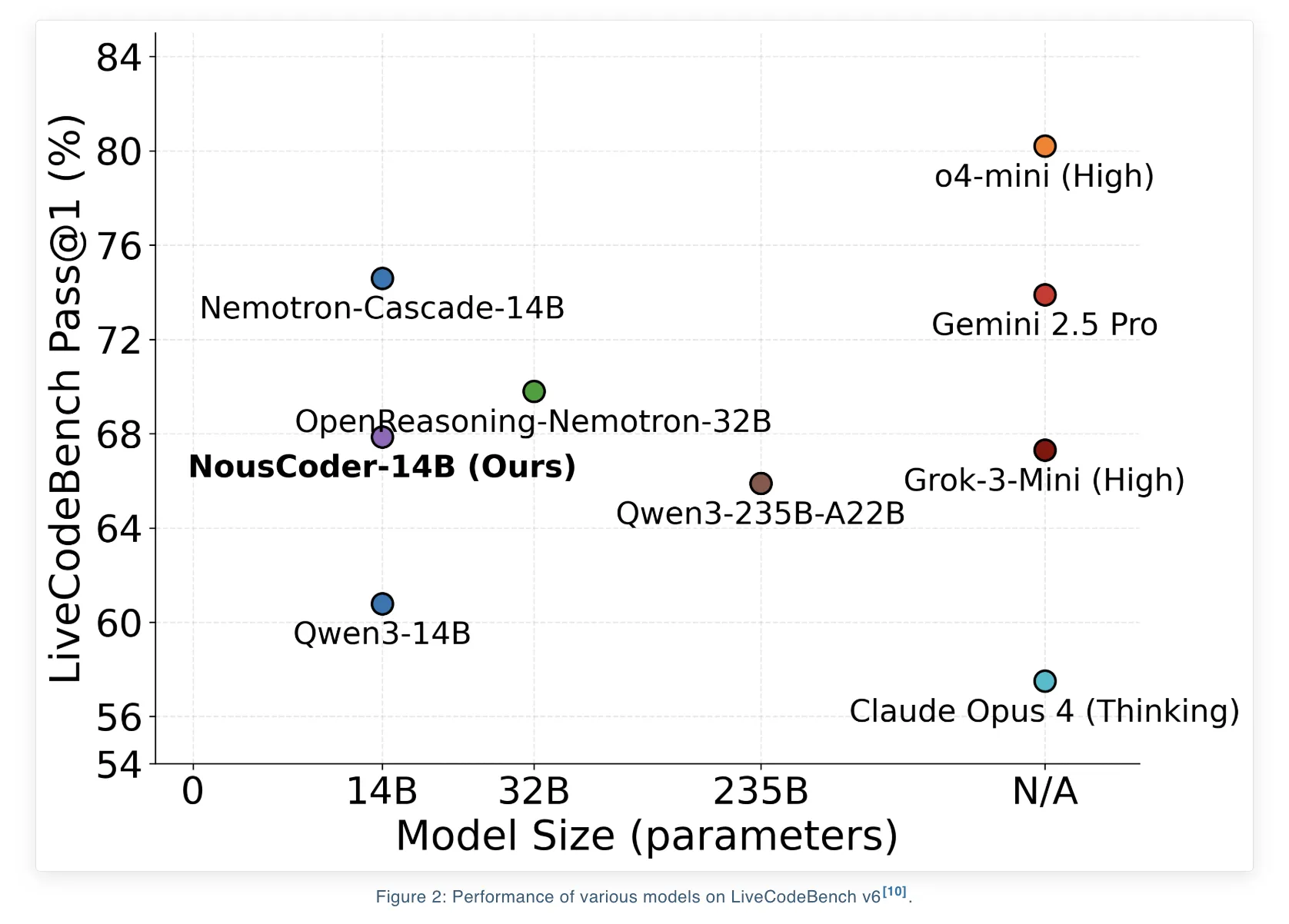

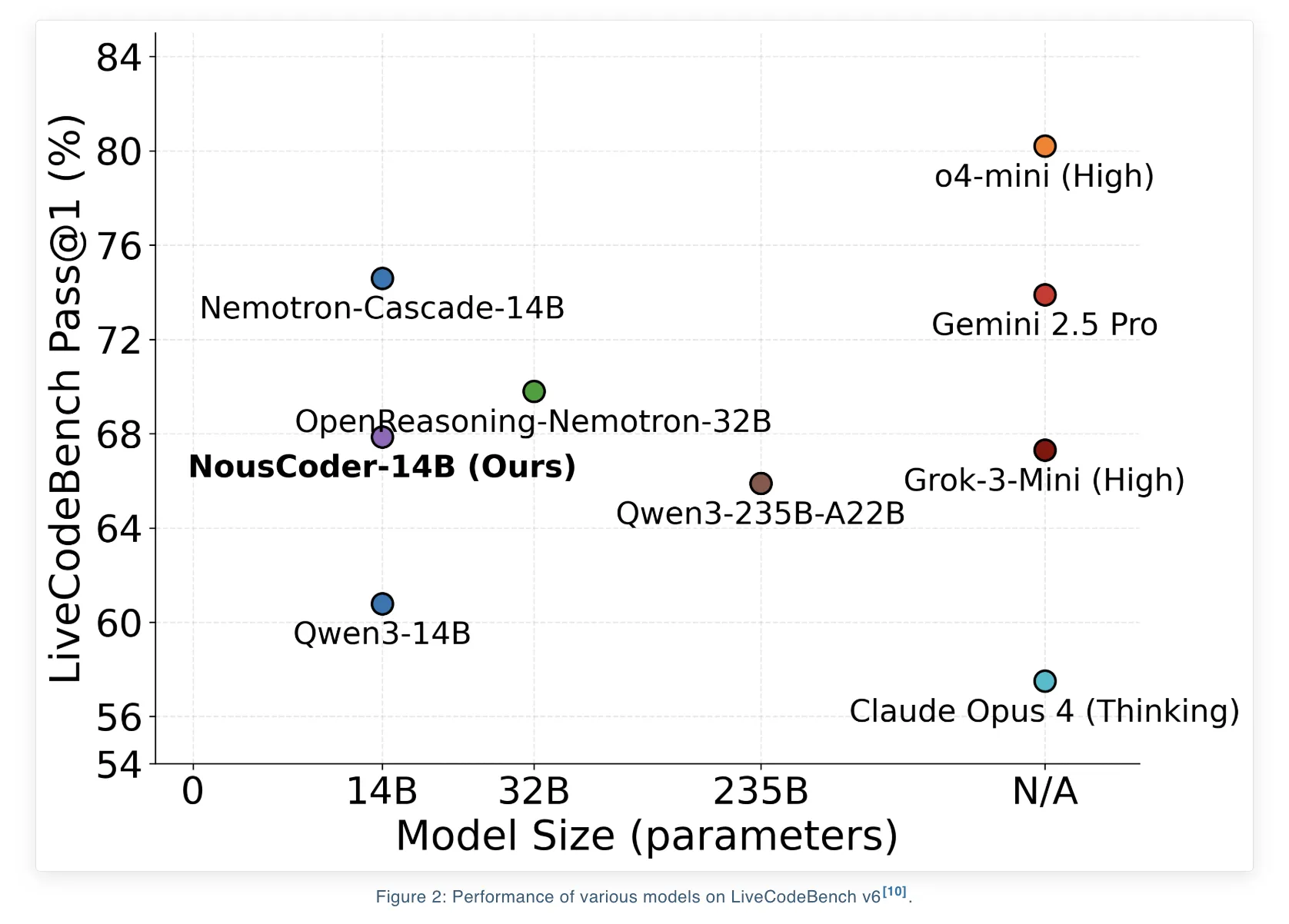

Nous Research has introduced NousCoder-14B, a competitive olympiad programming model post-trained on Qwen3-14B using reinforcement learning (RL) with verifiable rewards. On the LiveCodeBench v6 benchmark, which covers problems from 08/01/2024 to 05/01/2025, the model reaches a Pass@1 accuracy of 67.87 percent. This is 7.08 percentage points higher than the Qwen3-14B baseline of 60.79 percent on the same metric. The research team trained the model on 24k verifiable coding problems using 48 B200 GPUs over 4 days, and released the weights under the Apache 2.0 license on Hugging Face.

Benchmark focus and what Pass@1 means

LiveCodeBench v6 is designed for evaluating competitive programming. The test split used here contains 454 problems. The training set follows the same recipe as the DeepCoder-14B project from Agentica and Together AI: it includes problems from TACO Verified, PrimeIntellect SYNTHETIC-1, and LiveCodeBench problems created before 07/31/2024.

The benchmark covers only competitive programming style tasks. For each problem, a solution must respect strict time and memory limits and must pass a large set of hidden input/output tests. Pass@1 is the fraction of problems for which the first generated program passes all tests, including the time and memory constraints.
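
As a point of reference, Pass@1 is commonly computed with the unbiased pass@k estimator used in code generation benchmarks: for each problem, sample n programs, count the c that pass every test, and estimate the chance that a randomly chosen sample passes. The sketch below assumes that convention; the article does not say how many samples per problem the NousCoder-14B evaluation draws, and the function names are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n sampled programs, c of which pass all tests."""
    if n - c < k:
        # Every size-k subset must contain at least one passing program.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_1(results: list[tuple[int, int]]) -> float:
    """Average pass@1 over problems; each entry is (n_samples, n_passing)."""
    return sum(pass_at_k(n, c, 1) for n, c in results) / len(results)


# Three problems, four samples each: 4/4, 2/4 and 0/4 programs pass.
print(benchmark_pass_at_1([(4, 4), (4, 2), (4, 0)]))  # 0.5
```

With k = 1 the estimator reduces to c / n, the per-problem pass rate, so benchmark Pass@1 is simply the average pass rate across problems.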

Execution-based RL dataset construction

All datasets used for training consist of verifiable code generation problems. Each problem has a reference implementation and multiple test cases. The training set consists of 24k problems drawn from:

- TACO Verified

- PrimeIntellect SYNTHETIC-1

- LiveCodeBench problems created before 07/31/2024

The test set is LiveCodeBench v6, with 454 problems dated between 08/01/2024 and 05/01/2025.

Every problem is a complete competitive programming task with a statement, input format, output format, and test cases. This setup matters for RL because it provides a binary reward signal that is cheap to compute once the code has run.
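
The date-based separation between LiveCodeBench training and evaluation problems can be made concrete with a small record type. This is a minimal sketch: the `Problem` fields mirror the components listed above (statement, input and output format, test cases, reference implementation), but the field names, the `contest_date` attribute and the `split_livecodebench` helper are hypothetical, not the actual dataset schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Problem:
    statement: str
    input_format: str
    output_format: str
    reference_solution: str
    # Each test case is a (stdin, expected_stdout) pair.
    tests: list[tuple[str, str]] = field(default_factory=list)
    contest_date: Optional[date] = None


TRAIN_CUTOFF = date(2024, 7, 31)  # LiveCodeBench problems before this date go to training
TEST_START, TEST_END = date(2024, 8, 1), date(2025, 5, 1)  # LiveCodeBench v6 window


def split_livecodebench(problems: list[Problem]) -> tuple[list[Problem], list[Problem]]:
    """Split problems by date so no evaluation problem predates the training cutoff."""
    train, test = [], []
    for p in problems:
        if p.contest_date is None:
            continue  # undated problems are skipped in this sketch
        if p.contest_date < TRAIN_CUTOFF:
            train.append(p)
        elif TEST_START <= p.contest_date <= TEST_END:
            test.append(p)
    return train, test


problems = [
    Problem("stmt", "in", "out", "ref", contest_date=date(2024, 6, 1)),
    Problem("stmt", "in", "out", "ref", contest_date=date(2024, 9, 15)),
    Problem("stmt", "in", "out", "ref", contest_date=date(2025, 6, 1)),
]
train, test = split_livecodebench(problems)
print(len(train), len(test))  # 1 1
```

Splitting on contest date is what keeps the evaluation problems newer than anything the model saw during RL training.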

RL environment with Atropos and Modal

The RL environment is built using the Atropos framework. NousCoder-14B is prompted using the standard LiveCodeBench prompt format and generates Python code for each problem. Each rollout receives a scalar reward that depends on the test results:

- Reward 1 if the generated code passes all test cases for that problem

- Reward −1 if the code produces a wrong answer, exceeds the 15 second time limit, or exceeds the 4 GB memory limit on any test case
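
A local stand-in for the verifier shows how this ±1 reward can be computed. The team runs verification inside Modal containers; the sketch below instead uses `subprocess` on a Unix machine, assumes problems are standard-input/standard-output programs whose output is compared after stripping surrounding whitespace, and approximates the 4 GB memory cap with `RLIMIT_AS`. The helper names are hypothetical.

```python
import resource
import subprocess
import sys
from typing import Optional

TIME_LIMIT_S = 15                 # per-test time limit from the article
MEMORY_LIMIT_BYTES = 4 * 1024**3  # 4 GB, approximated as an address-space cap


def _limit_memory() -> None:
    # Runs in the child process before exec; best effort on platforms
    # that do not allow lowering RLIMIT_AS.
    try:
        resource.setrlimit(resource.RLIMIT_AS, (MEMORY_LIMIT_BYTES, MEMORY_LIMIT_BYTES))
    except (ValueError, OSError):
        pass


def run_test(code: str, stdin: str) -> Optional[str]:
    """Run one test; return stdout, or None on timeout, crash or memory error."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            input=stdin,
            capture_output=True,
            text=True,
            timeout=TIME_LIMIT_S,
            preexec_fn=_limit_memory,
        )
    except subprocess.TimeoutExpired:
        return None
    return proc.stdout if proc.returncode == 0 else None


def reward(code: str, tests: list[tuple[str, str]]) -> int:
    """+1 if every test passes, -1 on the first wrong answer or limit violation."""
    for stdin, expected in tests:
        out = run_test(code, stdin)
        if out is None or out.strip() != expected.strip():
            return -1
    return 1


tests = [("3\n", "6\n"), ("10\n", "20\n")]
print(reward("print(int(input()) * 2)", tests))  # 1
print(reward("print(int(input()) + 3)", tests))  # -1
```

The second program happens to pass the first test (3 + 3 = 6) but fails the second, which is enough to earn −1: the reward is all-or-nothing across test cases.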

To run untrusted code safely and at scale, the team uses Modal as an autoscaled sandbox. In the main design, which the research team describes as the setting used in practice, the system launches one Modal container per rollout. Each container runs all test cases for that rollout. This avoids mixing training compute with verification compute and keeps the RL loop stable.

The research team also pipelines inference and verification. When an inference worker finishes a generation, it sends the completion to a Modal verifier and immediately starts a new generation. With many inference workers and a fixed pool of Modal containers, this design keeps the training loop bound by inference compute instead of by verification.
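
The hand-off pattern can be sketched with `asyncio`. In this minimal sketch, `generate` and `verify` are hypothetical stand-ins that only sleep (they do not call a model or Modal), and an `asyncio.Semaphore` models the fixed pool of verification containers.

```python
import asyncio
import random


async def generate(worker_id: int, step: int) -> str:
    # Hypothetical stand-in for one policy-model generation.
    await asyncio.sleep(random.uniform(0.01, 0.03))
    return f"rollout-{worker_id}-{step}"


async def verify(completion: str) -> int:
    # Hypothetical stand-in for sandboxed test execution; pretend only step-0 rollouts pass.
    await asyncio.sleep(random.uniform(0.02, 0.05))
    return 1 if completion.endswith("-0") else -1


async def verify_in_pool(completion: str, pool: asyncio.Semaphore) -> tuple[str, int]:
    async with pool:  # at most pool_size verifications run at once
        return completion, await verify(completion)


async def inference_worker(worker_id: int, steps: int, pool: asyncio.Semaphore, pending: list) -> None:
    for step in range(steps):
        completion = await generate(worker_id, step)
        # Hand the completion to verification and immediately start the next generation.
        pending.append(asyncio.create_task(verify_in_pool(completion, pool)))


async def run(num_workers: int = 4, steps: int = 3, pool_size: int = 2):
    pool = asyncio.Semaphore(pool_size)
    pending = []
    await asyncio.gather(*(inference_worker(w, steps, pool, pending) for w in range(num_workers)))
    return await asyncio.gather(*pending)


results = asyncio.run(run())
print(len(results))  # 12
```

Because `create_task` returns immediately, a worker never waits for its previous rollout to be verified; verification latency only matters if the container pool falls behind.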

The team discusses 3 verification parallelization strategies: one container per problem, one per rollout, and one per test case. They ultimately avoid the per-test-case setting because of container launch overhead, and instead use an approach where each container evaluates many test cases and runs a small set of the hardest test cases first. If any of these fails, the system can stop verification early.
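
The hardest-first, early-exit order can be sketched as follows. The article does not say how the hardest test cases are identified, so this sketch assumes input length as a difficulty proxy; `order_tests`, `verify_early_stop` and the `passes` callback are hypothetical names.

```python
from typing import Callable


def order_tests(tests: list[tuple[str, str]], hard_first: int = 3) -> list[tuple[str, str]]:
    """Put the `hard_first` largest inputs first, keeping the rest in original order."""
    # Assumption: longer input means a harder (slower, more memory-hungry) test.
    ranked = sorted(range(len(tests)), key=lambda i: len(tests[i][0]), reverse=True)
    head = ranked[:hard_first]
    rest = [t for i, t in enumerate(tests) if i not in set(head)]
    return [tests[i] for i in head] + rest


def verify_early_stop(
    passes: Callable[[str, str], bool], tests: list[tuple[str, str]], hard_first: int = 3
) -> tuple[bool, int]:
    """Return (all_passed, tests_run), stopping at the first failing test."""
    run = 0
    for stdin, expected in order_tests(tests, hard_first):
        run += 1
        if not passes(stdin, expected):
            return False, run
    return True, run


tests = [("1", "1"), ("1 2 3 4 5", "15"), ("7", "7"), ("1 2", "3")]
correct = lambda s, e: str(sum(map(int, s.split()))) == e
buggy = lambda s, e: len(s.split()) < 3 and correct(s, e)  # fails only on large inputs
print(verify_early_stop(correct, tests, hard_first=2))  # (True, 4)
print(verify_early_stop(buggy, tests, hard_first=2))    # (False, 1)
```

A solution that is too slow or wrong on large inputs is rejected after a single test instead of after the whole suite, which is where most of the verification savings come from.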

GRPO, DAPO, GSPO, and GSPO+ objectives

NousCoder-14B uses Group Relative Policy Optimization (GRPO), which does not require a separate value model. On top of GRPO, the research team evaluates 3 objectives: Decoupled Clip and Dynamic Sampling Policy Optimization (DAPO), Group Sequence Policy Optimization (GSPO), and a modified GSPO variant called GSPO+.

All 3 objectives share the same definition of advantage. The advantage for each rollout is its reward normalized by the mean and standard deviation of the rewards within its group. DAPO applies importance weighting and clipping at the token level and introduces three main changes relative to GRPO:

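- A clip-higher rule that raises the upper clipping bound so that low-probability tokens can increase in probability, which encourages exploration

- A token-level policy gradient loss that gives each token equal weight

- Dynamic sampling, which drops groups where every rollout is correct or every rollout is incorrect, because such groups have zero advantage and carry no gradient signal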
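
The shared advantage and the three DAPO changes can be sketched in plain Python. This is an illustrative sketch, not the NousCoder-14B training code: the function names are hypothetical, the use of the population standard deviation is an assumption, the clip bounds `eps_low=0.2` and `eps_high=0.28` are example values, and a real trainer would compute the same quantities on tensors.

```python
import math
import statistics


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage: each reward normalized by its group's mean and std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def keep_group(rewards: list[float]) -> bool:
    """Dynamic sampling: drop groups where every rollout got the same reward."""
    return len(set(rewards)) > 1


def dapo_token_loss(logp_new, logp_old, advantages, eps_low=0.2, eps_high=0.28):
    """Token-level clipped surrogate with a decoupled, higher upper clip bound.

    logp_new / logp_old: per-rollout lists of per-token log-probabilities.
    advantages: one advantage per rollout, shared by all of its tokens.
    The loss is averaged over all tokens in the group, so each token has equal weight.
    """
    total, n_tokens = 0.0, 0
    for new_seq, old_seq, adv in zip(logp_new, logp_old, advantages):
        for lp_new, lp_old in zip(new_seq, old_seq):
            ratio = math.exp(lp_new - lp_old)
            clipped = min(max(ratio, 1 - eps_low), 1 + eps_high)
            total += -min(ratio * adv, clipped * adv)
            n_tokens += 1
    return total / n_tokens


rewards = [1, -1, -1, 1]
print(keep_group(rewards), keep_group([1, 1, 1, 1]))  # True False
print([round(a, 3) for a in group_advantages(rewards)])  # [1.0, -1.0, -1.0, 1.0]
```

Setting `eps_high` above `eps_low` is the clip-higher rule: a token whose probability is rising under a positive advantage can move further before its gradient is clipped.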

GSPO moves the importance weighting to the sequence level. It defines a sequence importance ratio that aggregates token ratios over the whole program. GSPO+ keeps the sequence-level correction but rebalances gradients so that tokens are weighted equally regardless of sequence length.
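
The sequence-level ratio can be sketched the same way. Following the GSPO paper's definition, the sketch takes the ratio as the exponential of the mean per-token log-ratio, a length-normalized sequence likelihood ratio. The article gives no formula for GSPO+, so the `token_weighted=True` branch, which weights each rollout by its token count so that every token contributes equally, is only an assumed reading of the description above. Function names and clip values are illustrative.

```python
import math


def sequence_ratio(lp_new: list[float], lp_old: list[float]) -> float:
    """GSPO importance ratio: length-normalized sequence likelihood ratio."""
    mean_log_ratio = sum(n - o for n, o in zip(lp_new, lp_old)) / len(lp_new)
    return math.exp(mean_log_ratio)


def gspo_loss(logp_new, logp_old, advantages, eps_low=3e-4, eps_high=4e-4, token_weighted=False):
    """Sequence-level clipped surrogate.

    token_weighted=False: every rollout counts equally (GSPO-style average).
    token_weighted=True: every rollout is weighted by its length, so each token
    counts equally (an assumed reading of the GSPO+ rebalancing).
    """
    terms, weights = [], []
    for new_seq, old_seq, adv in zip(logp_new, logp_old, advantages):
        s = sequence_ratio(new_seq, old_seq)
        clipped = min(max(s, 1 - eps_low), 1 + eps_high)
        terms.append(-min(s * adv, clipped * adv))
        weights.append(len(new_seq) if token_weighted else 1)
    return sum(t * w for t, w in zip(terms, weights)) / sum(weights)


lp = [[-1.0, -2.0], [-0.5]]  # a 2-token and a 1-token rollout, unchanged policy
print(round(gspo_loss(lp, lp, [1.0, -1.0]), 4))                       # 0.0
print(round(gspo_loss(lp, lp, [1.0, -1.0], token_weighted=True), 4))  # -0.3333
```

With equal per-rollout weighting the two rollouts cancel; with token weighting the longer rollout dominates, which is the length effect GSPO+ is described as correcting.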

On LiveCodeBench v6, the differences between these objectives are modest. At a maximum context length of 81,920 tokens, DAPO reaches a Pass@1 of 67.87 percent, while GSPO and GSPO+ reach 66.26 percent and 66.52 percent. At 40,960 tokens, all 3 objectives converge to about 63 percent Pass@1.

Iterative context extension and overlong filtering

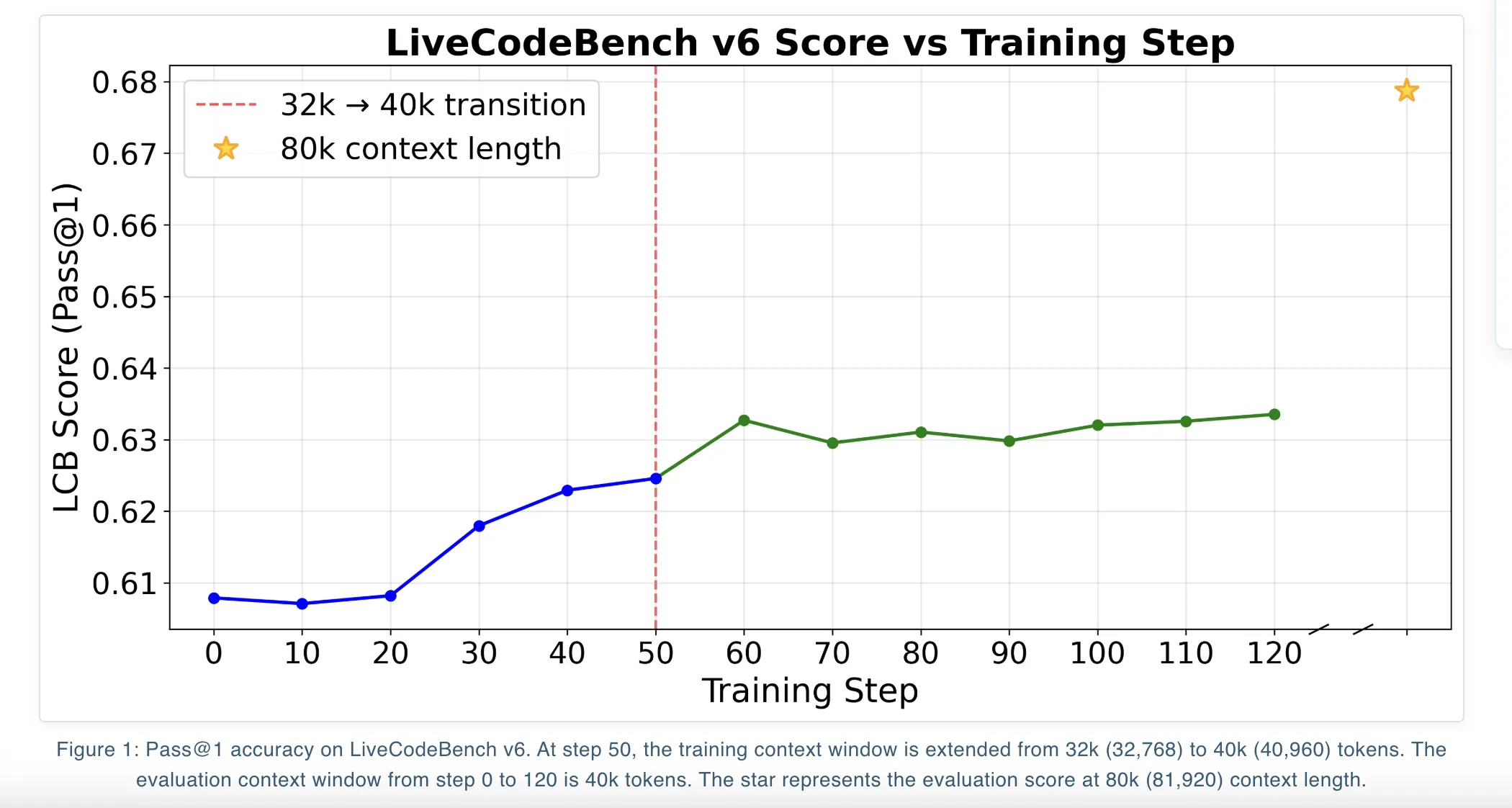

Qwen3-14B supports long context, and training follows an iterative context extension schedule. The team first trains the model with a 32k context window and then continues training at the maximum Qwen3-14B context window of 40k. At each stage they select the checkpoint with the best LiveCodeBench score at 40k context and use YaRN context extension at evaluation time to reach 80k tokens, that is, 81,920 tokens.
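
For readers reproducing the evaluation setting with Hugging Face `transformers`, YaRN is typically enabled through the model's `rope_scaling` configuration, in the `{"rope_type": "yarn", "factor": ..., "original_max_position_embeddings": ...}` format shown for Qwen3 models. The article does not say which inference stack Nous Research used, so this is an assumption; the sketch only derives the scaling factor from the 40,960-token training window and the 81,920-token evaluation target, and `yarn_rope_scaling` is a hypothetical helper.

```python
TRAIN_CONTEXT = 40_960  # context window of the final training stage
EVAL_CONTEXT = 81_920   # context length targeted at evaluation time


def yarn_rope_scaling(train_ctx: int, eval_ctx: int) -> dict:
    """Build a rope_scaling entry that stretches train_ctx to eval_ctx with YaRN."""
    return {
        "rope_type": "yarn",
        "factor": eval_ctx / train_ctx,
        "original_max_position_embeddings": train_ctx,
    }


print(yarn_rope_scaling(TRAIN_CONTEXT, EVAL_CONTEXT))
# {'rope_type': 'yarn', 'factor': 2.0, 'original_max_position_embeddings': 40960}
```

A factor of 2.0 doubles the usable positions without further training, which is why checkpoint selection happens at 40k and the 81,920-token number is reached only at evaluation time.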

A key trick is overlong filtering. If a generated program exceeds the maximum context window, its advantage is reset to zero. This removes that rollout from the gradient signal rather than penalizing it. The research team reports that this avoids pushing the model toward shorter solutions purely for optimization reasons and helps maintain quality when the context length is scaled up at test time.
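
Overlong filtering amounts to masking advantages. In this minimal sketch, a rollout counts as overlong when its length reaches the training context limit (an assumption; a real pipeline would more likely check the generation's stop reason), and `apply_overlong_filter` is a hypothetical helper.

```python
def apply_overlong_filter(advantages: list[float], truncated: list[bool]) -> list[float]:
    """Zero the advantage of rollouts that hit the context limit.

    A zero advantage removes the rollout from the policy-gradient signal
    instead of treating it as a failure with a negative advantage.
    """
    return [0.0 if cut else adv for adv, cut in zip(advantages, truncated)]


MAX_LEN = 40_960  # training context window (assumed truncation threshold)
lengths = [12_000, 40_960, 30_500, 40_960]
truncated = [n >= MAX_LEN for n in lengths]
print(apply_overlong_filter([0.9, -1.1, 1.2, -0.4], truncated))  # [0.9, 0.0, 1.2, 0.0]
```

Had the truncated rollouts kept their negative advantages, the model would be pushed toward shorter programs regardless of correctness; zeroing them keeps only the informative rollouts in the update.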

Key Takeaways

- NousCoder-14B is a competitive programming model based on Qwen3-14B and trained with execution-based RL. It reaches 67.87 percent Pass@1 on LiveCodeBench v6, a gain of 7.08 percentage points over the Qwen3-14B baseline of 60.79 percent on the same benchmark.

- The model was trained on 24k verifiable coding problems from TACO Verified, PrimeIntellect SYNTHETIC-1, and LiveCodeBench problems created before 07/31/2024, and evaluated on the LiveCodeBench v6 test set of 454 problems from 08/01/2024 to 05/01/2025.

- The RL setup uses Atropos, with Python solutions executed in sandboxed Modal containers, a simple reward of 1 for solving all test cases and −1 for any failure or resource limit violation, and a pipelined design in which inference and verification run concurrently.

- The GRPO-based objectives DAPO, GSPO, and GSPO+ are used for long-context code RL. All of them operate on the same group-normalized rewards and show similar performance, with DAPO reaching the best Pass@1 at the longest context of 81,920 tokens.

- Training uses iterative context extension, first at 32k and then at 40k tokens, together with YaRN-based extension at evaluation time to 81,920 tokens. It also relies on overlong filtering for stability, and the release is a fully open stack with Apache 2.0 weights and RL pipeline code.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the power of Artificial Intelligence for the benefit of society. His latest endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among readers.