Zyphra Releases ZUNA: A 380M-Parameter BCI Foundation Model for EEG Data, Advancing Thought-to-Text Development

Brain-computer interfaces (BCIs) are finally having their ‘foundation model’ moment. Zyphra, a research lab specializing in large-scale models, has just released ZUNA, a 380M-parameter foundation model built specifically for EEG signals. ZUNA is a masked diffusion autoencoder designed to perform channel infilling and super-resolution on any electrode layout. The release includes model weights under the Apache-2.0 license and an MNE-compatible inference stack.

The Problem with ‘Brittle’ EEG Models

For decades, researchers have struggled with the ‘Wild West’ of EEG data. Different datasets use different numbers of channels and inconsistent electrode positions. Most deep learning models are trained on fixed channel montages, so they fail when applied to new datasets or recording conditions. EEG measurements are also frequently contaminated by noise from electrode shifts or subject movement.

ZUNA’s 4D Architecture: Spatial Intelligence

ZUNA addresses the generalization problem by treating brain signals as spatially grounded data. Instead of assuming a fixed electrode grid, ZUNA injects spatiotemporal structure through a 4D rotary positional encoding (4D RoPE).
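To make the idea of a rotary encoding over four axes concrete, here is a minimal sketch. It assumes an "axial" layout in which the feature vector is split into four equal groups, one per coordinate of a position (x, y, z, t), and each group is rotated pairwise as in standard RoPE. The function name `rope_4d`, the equal split, and the frequency base are illustrative assumptions; the article does not describe how ZUNA's 4D RoPE is actually parameterized.

```python
import math


def rope_4d(vec, pos, base=10000.0):
    """Rotate feature pairs of `vec` by angles derived from a 4D position.

    vec: list of floats; length must be a multiple of 2 * len(pos).
    pos: (x, y, z, t) coordinates of the token (hypothetical layout).
    """
    n_axes = len(pos)
    if len(vec) % (2 * n_axes) != 0:
        raise ValueError("feature dim must be a multiple of 2 * number of axes")
    per_axis = len(vec) // n_axes      # features assigned to each axis
    n_pairs = per_axis // 2            # rotated pairs per axis
    out = list(vec)
    for a, coord in enumerate(pos):
        for p in range(n_pairs):
            freq = base ** (-p / n_pairs)   # geometric frequency ladder
            angle = coord * freq
            i = a * per_axis + 2 * p
            c, s = math.cos(angle), math.sin(angle)
            # 2D rotation of the pair (vec[i], vec[i + 1])
            out[i] = vec[i] * c - vec[i + 1] * s
            out[i + 1] = vec[i] * s + vec[i + 1] * c
    return out


def dot(u, v):
    """Plain dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))
```

The useful property of this construction is that `dot(rope_4d(q, p1), rope_4d(k, p2))` depends only on the offset between `p1` and `p2`, not on their absolute values, which is what lets attention reason about relative electrode placement and relative time rather than a fixed channel order.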

The model tokenizes multichannel EEG into short temporal windows of 0.125 seconds, or 32 samples at the 256 Hz sampling rate. Each token is mapped to a 4D coordinate: its 3D scalp position (x, y, z) and its coarse time index (t).
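The tokenization step can be sketched as follows. This is an illustration under assumptions, not Zyphra's code: the function name `tokenize`, the list-based data layout, and the choice to discard a trailing partial window are all hypothetical. Plain Python lists are used to keep the example dependency-free.

```python
def tokenize(eeg, positions, window=32):
    """Split multichannel EEG into per-channel windows tagged with 4D coordinates.

    eeg: list of channels, each a list of samples (assumed to be at 256 Hz).
    positions: list of (x, y, z) electrode coordinates, one per channel.
    Returns a list of ((x, y, z, t), samples) tuples, where t is the window index.
    """
    if len(eeg) != len(positions):
        raise ValueError("need exactly one position per channel")
    tokens = []
    for channel, (x, y, z) in zip(eeg, positions):
        n_windows = len(channel) // window   # trailing partial window is dropped
        for t in range(n_windows):
            chunk = channel[t * window:(t + 1) * window]
            tokens.append(((x, y, z, t), chunk))
    return tokens
```

With a 5-second clip at 256 Hz (1,280 samples per channel), each channel yields 40 tokens, so the token count scales with the number of electrodes rather than being tied to a fixed montage.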

Diffusion as a Generative Engine

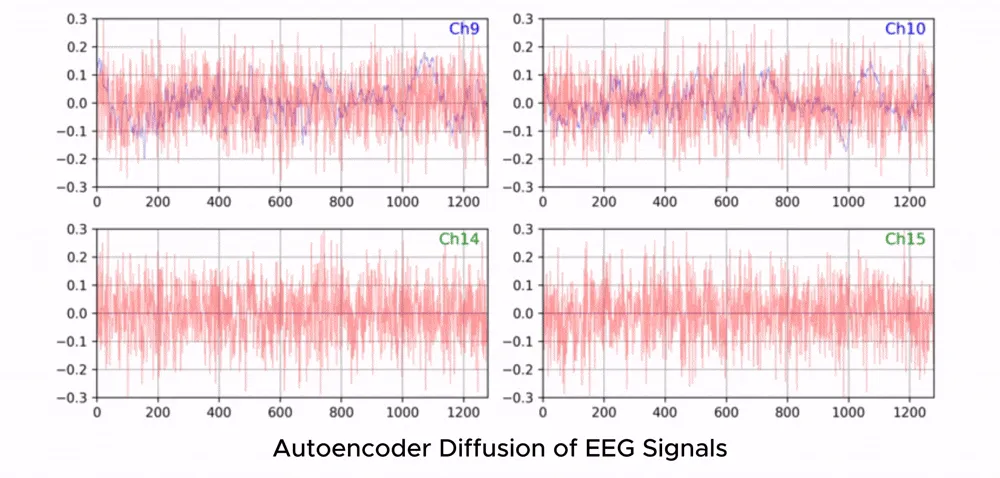

ZUNA uses a diffusion approach because EEG signals are continuous and real-valued. The model pairs a diffusion decoder with an encoder that compresses signal information into a latent bottleneck.

During training, Zyphra used a heavy channel-dropout objective. They randomly dropped 90% of the channels, replacing them with zeros in the encoder input. The model was then tasked with reconstructing these ‘hidden’ signals from the information in the remaining 10% of the channels. This forced the model to learn deep cross-channel correlations and a robust internal representation of brain activity.
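A minimal sketch of that masking step is shown below. The function name `drop_channels`, the fixed drop fraction, and the seeding are assumptions for illustration; the article does not say whether the dropout rate was fixed or sampled per example.

```python
import random


def drop_channels(eeg, drop_fraction=0.9, seed=None):
    """Zero out a random subset of channels, as in a channel-dropout objective.

    eeg: list of channels, each a list of samples.
    Returns (masked_eeg, dropped_indices); the input is left unmodified.
    """
    rng = random.Random(seed)
    n_channels = len(eeg)
    n_drop = round(n_channels * drop_fraction)
    dropped = set(rng.sample(range(n_channels), n_drop))
    masked = [
        [0.0] * len(ch) if i in dropped else list(ch)
        for i, ch in enumerate(eeg)
    ]
    return masked, sorted(dropped)
```

During training, the masked array would be the encoder input and the original values at `dropped_indices` would be the reconstruction target. For a 20-channel recording this leaves only 2 channels visible.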

The Massive Data Pipeline: 2 Million Channel-Hours

Data quality is the heartbeat of any foundation model. Zyphra compiled a harmonized corpus spanning 208 public datasets. This collection includes:

- 2 million channel-hours of EEG recordings.

- Over 24 million non-overlapping 5-second samples.

- A wide range of channel counts, from 2 to 256 per recording.

The preprocessing pipeline resampled all signals to a common sampling rate of 256 Hz. The team used MNE-Python to apply a high-pass filter at 0.5 Hz and an adaptive notch filter to remove line noise. The signals were then z-score normalized to ensure zero mean and unit variance while preserving spatial structure.
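The normalization step can be sketched as below. One plausible reading of "preserving spatial structure" is that a single mean and standard deviation are computed over the whole recording rather than per channel, so relative amplitudes between electrodes survive; that reading is an assumption, as is the function name `zscore_recording`. Resampling and filtering are omitted here.

```python
import statistics


def zscore_recording(eeg):
    """Z-score a recording using one global mean and standard deviation.

    eeg: list of channels, each a list of samples.
    Using recording-wide statistics (an assumption) keeps the relative
    amplitude differences between channels intact.
    """
    flat = [v for ch in eeg for v in ch]
    mean = statistics.fmean(flat)
    std = statistics.pstdev(flat)
    if std == 0:
        raise ValueError("recording is constant; cannot z-score")
    return [[(v - mean) / std for v in ch] for ch in eeg]
```

A per-channel z-score would instead force every electrode to unit variance, which erases exactly the amplitude gradients across the scalp that a spatially aware model could otherwise exploit.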

Benchmarks: Killing the Spherical Spline

For years, the industry standard for filling in missing EEG data has been spherical-spline interpolation. Although splines are useful for capturing local smoothness across the scalp, they have no ‘learned prior’ and fail when the gaps between sensors become too large.

ZUNA consistently outperforms spherical-spline interpolation across multiple benchmarks, including the ANPHY-Sleep dataset and the BCI2000 motor-imagery dataset. The performance gap widens at higher dropout rates. At an extreme 90% dropout (effectively 10x upsampling, since only one channel in ten is observed), ZUNA maintains high reconstruction fidelity while spline methods degrade significantly.

Key Takeaways

- Universal Generalization: ZUNA is a 380M-parameter model that works with any EEG system, regardless of the number or location of electrodes. Unlike previous AI models that are limited to fixed layouts, it generalizes across diverse datasets and novel channel positions.

- 4D Spatiotemporal Intelligence: The model uses a 4D Rotary Positional Encoding (4D RoPE) scheme to map brain signals in 3D space (x, y, z) and time (t).

- Advanced Channel Reconstruction: Trained as a masked diffusion autoencoder, ZUNA significantly outperforms traditional spherical-spline interpolation. It excels at ‘super-resolution,’ maintaining high accuracy even when up to 90% of channels are missing or corrupted.

- Massive Training Scale: The model was trained on a harmonized corpus of 208 datasets, totaling approximately 2 million channel-hours and 24 million unique 5-second samples. This scale allows it to learn deep cross-channel correlations that simple geometric interpolation misses.
