How to Build a Production-Ready Multi-Agent Incident Response System Using OpenAI Swarm and Advanced Agent Tools

In this tutorial, we build an advanced yet practical multi-agent system using OpenAI Swarm, running in Colab. We show how to set up specialized agents, such as a triage agent, an SRE agent, a communications agent, and a critic, that jointly handle a real-world production incident. By orchestrating agent handoffs, integrating lightweight retrieval and decision-ranking tools, and keeping the implementation clean and modular, we show how Swarm lets us design controlled, agent-based workflows without heavy frameworks or complex infrastructure.

!pip -q install -U openai

!pip -q install -U "git+

import os

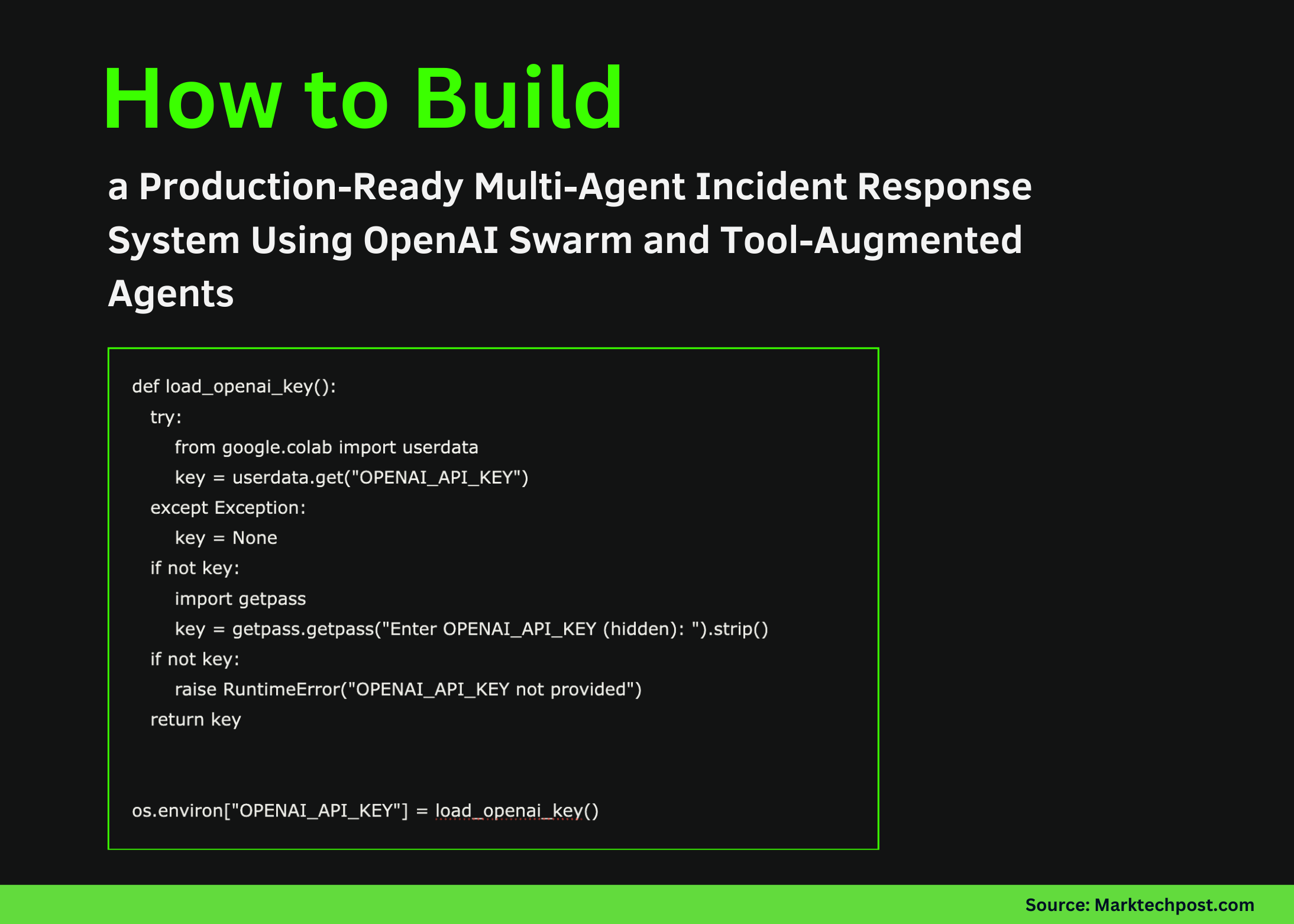

def load_openai_key():
    # Prefer the key stored in Colab secrets, if that runtime is available.
    try:
        from google.colab import userdata
        key = userdata.get("OPENAI_API_KEY")
    except Exception:
        key = None
    # Otherwise fall back to a hidden interactive prompt.
    if not key:
        import getpass
        key = getpass.getpass("Enter OPENAI_API_KEY (hidden): ").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY not provided")
    return key

os.environ["OPENAI_API_KEY"] = load_openai_key()

We configure the environment and load the OpenAI API key securely so that the notebook can run on Google Colab. The key is fetched from Colab secrets if available; otherwise we fall back to a hidden input prompt. This keeps authentication simple and reusable across sessions.
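The fallback order described above can be generalized into a small provider chain. The following is a minimal sketch, not part of the original notebook: `first_available_key`, `from_colab_secrets`, and `from_environment` are hypothetical helper names, and checking an existing environment variable is an added assumption for running outside Colab.

```python
import os
from typing import Callable, Iterable, Optional


def first_available_key(providers: Iterable[Callable[[], Optional[str]]]) -> str:
    """Return the first non-empty key produced by the providers, tried in order."""
    for provider in providers:
        try:
            key = provider()
        except Exception:
            # A provider that is unavailable (e.g. no Colab runtime) is skipped.
            key = None
        if key and key.strip():
            return key.strip()
    raise RuntimeError("OPENAI_API_KEY not provided")


def from_colab_secrets() -> Optional[str]:
    # Hypothetical provider: only importable inside a Colab runtime.
    from google.colab import userdata
    return userdata.get("OPENAI_API_KEY")


def from_environment() -> Optional[str]:
    # Hypothetical provider: reuse a key that is already exported.
    return os.environ.get("OPENAI_API_KEY")


# Demo with stand-in providers so the sketch runs anywhere.
demo_key = first_available_key([lambda: None, lambda: "  demo-key  "])
print(demo_key)  # demo-key
```

In a notebook, the chain might be called as `first_available_key([from_colab_secrets, from_environment, lambda: getpass.getpass("Enter OPENAI_API_KEY (hidden): ")])` after importing `getpass`, so the interactive prompt is only reached when the other sources yield nothing.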

import json
import re
from typing import List, Dict

from swarm import Swarm, Agent

client = Swarm()

We import the core Python utilities and instantiate the Swarm client that orchestrates all agent interactions. This establishes the runtime backbone that lets agents communicate, hand off tasks, and make tool calls. It serves as the entry point for the multi-agent workflow.

KB_DOCS = [
    {
        "id": "kb-incident-001",
        "title": "API Latency Incident Playbook",
        "text": "If p95 latency spikes, validate deploys, dependencies, and error rates. Rollback, cache, rate-limit, scale. Compare p50 vs p99 and inspect upstream timeouts."
    },
    {
        "id": "kb-risk-001",
        "title": "Risk Communication Guidelines",
        "text": "Updates must include impact, scope, mitigation, owner, and next update. Avoid blame and separate internal vs external messaging."
    },
    {
        "id": "kb-ops-001",
        "title": "On-call Handoff Template",
        "text": "Include summary, timeline, current status, mitigations, open questions, next actions, and owners."
    },
]

def _normalize(s: str) -> List[str]:
    # Lowercase, replace everything except letters, digits, and whitespace, then split.
    return re.sub(r"[^a-z0-9\s]", " ", s.lower()).split()

def search_kb(query: str, top_k: int = 3) -> str:
    q = set(_normalize(query))
    scored = []
    for d in KB_DOCS:
        # Score = number of distinct query tokens that appear in the document.
        score = len(q.intersection(set(_normalize(d["title"] + " " + d["text"]))))
        scored.append((score, d))
    scored.sort(key=lambda x: x[0], reverse=True)
    # Return matching documents, or the top-ranked one if nothing matches.
    docs = [d for s, d in scored[:top_k] if s > 0] or [scored[0][1]]
    return json.dumps(docs, indent=2)

We define a lightweight internal knowledge base and a retrieval function that surfaces relevant context during the agent's reasoning. Using simple token-overlap matching, we let agents ground their responses in predefined operational documents. This shows how Swarm can be extended with domain-specific memory without external dependencies.
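To make the token-overlap scoring concrete, here is a small worked example. It is a self-contained sketch that mirrors the normalization described above; the helper names `tokens` and `overlap`, the shortened document strings, and the query are illustrative assumptions rather than part of the original notebook.

```python
import re
from typing import Set


def tokens(text: str) -> Set[str]:
    """Lowercase, replace non-alphanumerics with spaces, split into a token set."""
    return set(re.sub(r"[^a-z0-9\s]", " ", text.lower()).split())


def overlap(query: str, document: str) -> int:
    """Number of distinct tokens shared by the query and the document."""
    return len(tokens(query) & tokens(document))


# Shortened stand-ins for two knowledge-base entries.
playbook = ("API Latency Incident Playbook If p95 latency spikes, "
            "validate deploys, dependencies, and error rates.")
handoff = ("On-call Handoff Template Include summary, timeline, "
           "current status, and owners.")

query = "p95 latency spike after deploy"

print(overlap(query, playbook))  # 2  ("p95" and "latency")
print(overlap(query, handoff))   # 0
```

Note that "spike" does not match "spikes" and "deploy" does not match "deploys": the matching is exact, with no stemming, which is the trade-off of keeping retrieval this lightweight.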

def estimate_mitigation_impact(options_json: str) -> str:
    # Expects a JSON list of objects with "option", "confidence", and "risk" keys.
    try:
        options = json.loads(options_json)
    except Exception as e:
        return json.dumps({"error": str(e)})
    ranking = []
    for o in options:
        conf = float(o.get("confidence", 0.5))
        risk = o.get("risk", "medium")
        # Higher risk subtracts more from the confidence score.
        penalty = {"low": 0.1, "medium": 0.25, "high": 0.45}.get(risk, 0.25)
        ranking.append({
            "option": o.get("option"),
            "confidence": conf,
            "risk": risk,
            "score": round(conf - penalty, 3)
        })
    ranking.sort(key=lambda x: x["score"], reverse=True)
    return json.dumps(ranking, indent=2)

We introduce a structured tool that scores and ranks mitigation strategies based on confidence and risk. This lets agents move beyond free-form reasoning and produce semi-quantitative decisions. We show how tools can enforce consistency and decision discipline in agent outputs.
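The scoring rule is simply confidence minus a fixed risk penalty. The sketch below replays that arithmetic on three mitigation options; the option names and confidence values are invented for illustration, and `score` is a hypothetical helper that restates the formula rather than the tool itself.

```python
import json

# Same penalty table as described above; unknown risk levels fall back to 0.25.
RISK_PENALTY = {"low": 0.1, "medium": 0.25, "high": 0.45}


def score(confidence: float, risk: str) -> float:
    """Confidence minus the penalty for the given risk level, rounded to 3 places."""
    return round(confidence - RISK_PENALTY.get(risk, 0.25), 3)


# Hypothetical options an SRE agent might propose.
options = [
    {"option": "rollback deploy", "confidence": 0.8, "risk": "low"},
    {"option": "scale out", "confidence": 0.6, "risk": "medium"},
    {"option": "disable feature flag", "confidence": 0.9, "risk": "high"},
]

ranked = sorted(options, key=lambda o: score(o["confidence"], o["risk"]), reverse=True)
for o in ranked:
    print(o["option"], score(o["confidence"], o["risk"]))

# The string an agent would pass to the tool as its options_json argument.
options_json = json.dumps(options)
```

Here the rollback scores 0.8 - 0.1 = 0.7, the feature flag 0.9 - 0.45 = 0.45, and scaling out 0.6 - 0.25 = 0.35, so the highest-confidence option does not win once its risk is priced in.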

def handoff_to_sre():
    return sre_agent

def handoff_to_comms():
    return comms_agent

def handoff_to_handoff_writer():
    return handoff_writer_agent

def handoff_to_critic():
    return critic_agent

We define explicit handoff functions that enable one agent to transfer control to another. This snippet shows how we model delegation and specialization within Swarm. It makes agent-to-agent routing transparent and easy to extend.
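The handoff mechanism can be pictured with a toy dispatcher. This is a simplified model written for illustration, not Swarm's actual implementation: `ToyAgent` and `call_tool` are hypothetical stand-ins, and the only behavior borrowed from Swarm is the convention that a tool returning an agent transfers control to that agent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyAgent:
    """Stand-in for swarm.Agent with only the fields this sketch needs."""
    name: str
    functions: List[Callable] = field(default_factory=list)


def handoff_to_sre():
    # The name sre_agent is looked up when the function is called,
    # so the agent may be defined later in the file.
    return sre_agent


triage_agent = ToyAgent("Triage", [handoff_to_sre])
sre_agent = ToyAgent("SRE")


def call_tool(active: ToyAgent, tool_name: str) -> ToyAgent:
    """Run a named tool; if it returns an agent, that agent becomes active."""
    tools: Dict[str, Callable] = {f.__name__: f for f in active.functions}
    result = tools[tool_name]()
    return result if isinstance(result, ToyAgent) else active


active = call_tool(triage_agent, "handoff_to_sre")
print(active.name)  # SRE
```

The late name lookup is why the tutorial can declare the handoff functions before the agents they return.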

triage_agent = Agent(
    name="Triage",
    model="gpt-4o-mini",
    instructions="""
Decide which agent should handle the request.
Use SRE for incident response.
Use Comms for customer or executive messaging.
Use HandoffWriter for on-call notes.
Use Critic for review or improvement.
""",
    functions=[search_kb, handoff_to_sre, handoff_to_comms, handoff_to_handoff_writer, handoff_to_critic]
)

sre_agent = Agent(
    name="SRE",
    model="gpt-4o-mini",
    instructions="""
Produce a structured incident response with triage steps,
ranked mitigations, ranked hypotheses, and a 30-minute plan.
""",
    functions=[search_kb, estimate_mitigation_impact]
)

comms_agent = Agent(
    name="Comms",
    model="gpt-4o-mini",
    instructions="""
Produce an external customer update and an internal technical update.
""",
    functions=[search_kb]
)

handoff_writer_agent = Agent(
    name="HandoffWriter",
    model="gpt-4o-mini",
    instructions="""
Produce a clean on-call handoff document with standard headings.
""",
    functions=[search_kb]
)

critic_agent = Agent(
    name="Critic",
    model="gpt-4o-mini",
    instructions="""
Critique the previous answer, then produce a refined final version and a checklist.
"""
)

We configure multiple specialized agents, each with a clearly defined responsibility and instruction set. By separating triage, incident response, communications, handoff writing, and critique, we demonstrate a clean division of labor.

def run_pipeline(user_request: str):
    messages = [{"role": "user", "content": user_request}]
    # Stage 1: triage routes the request to a specialist agent.
    r1 = client.run(agent=triage_agent, messages=messages, max_turns=8)
    # Stage 2: the critic reviews and refines the specialist's answer.
    messages2 = r1.messages + [{"role": "user", "content": "Review and improve the last answer"}]
    r2 = client.run(agent=critic_agent, messages=messages2, max_turns=4)
    return r2.messages[-1]["content"]

request = """
Production p95 latency jumped from 250ms to 2.5s after a deploy.
Errors slightly increased, DB CPU stable, upstream timeouts rising.
Provide a 30-minute action plan and a customer update.
"""

print(run_pipeline(request))

We assemble the full orchestration pipeline, which runs triage, specialist reasoning, and critical refinement in sequence. This snippet shows how we execute an end-to-end workflow with a single function call. It ties together all agents and tools into a coherent, production-style agent system.
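Two details of the message plumbing between the stages are worth sketching. The helpers below are hypothetical and operate on plain message dictionaries, so they run without Swarm. They assume, as Swarm's README describes the response object, that a run returns the messages generated during that run, in which case the original user request would have to be re-attached for the critic to see it; they also guard against a final message whose content is empty.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]


def build_review_messages(
    original: List[Message],
    produced: List[Message],
    review_prompt: str = "Review and improve the last answer",
) -> List[Message]:
    """Concatenate the original request, the first-stage output, and the review prompt."""
    return list(original) + list(produced) + [{"role": "user", "content": review_prompt}]


def last_assistant_text(messages: List[Message]) -> str:
    """Return the most recent non-empty assistant message, or an empty string."""
    for message in reversed(messages):
        if message.get("role") == "assistant" and message.get("content"):
            return message["content"]
    return ""


# Stub data standing in for a real run.
original = [{"role": "user", "content": "p95 latency jumped after a deploy."}]
produced = [
    {"role": "assistant", "content": None},                  # e.g. a tool-call turn
    {"role": "tool", "content": "[]"},
    {"role": "assistant", "content": "Roll back the deploy first."},
]

review_input = build_review_messages(original, produced)
print(len(review_input))              # 5
print(last_assistant_text(produced))  # Roll back the deploy first.
```

Whether the critic should see the original request is a design choice; including it lets the review check the answer against what was actually asked.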

In conclusion, we have established a clear pattern for designing agent-oriented systems with OpenAI Swarm that emphasizes transparency, separation of responsibilities, and iterative refinement. We have shown how to route tasks intelligently, enrich agent reasoning with local tools, and improve output quality with a critic loop, all while maintaining a simple, Colab-compatible setup. This approach lets us scale from experimentation to real use cases, making Swarm a solid foundation for building reliable, production-style AI workflows.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the power of Artificial Intelligence for the benefit of society. His latest endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its extensive coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among readers.