How This Agentic Memory Study Unifies Long- and Short-Term Memory for LLM Agents

How do you design an LLM agent that decides for itself what to store in long-term memory, what to keep in short-term context and what to discard, without hand-tuned heuristics or extra controllers? Can a single policy learn to manage both memory types through the same action space as text generation?

Researchers from Alibaba Group and Wuhan University present Agentic Memory, or AgeMem, a framework that lets large language model agents learn to manage both long-term and short-term memory as part of a single policy. Instead of relying on handwritten rules or external controllers, the agent decides when to store, retrieve, summarize and forget, using memory tools integrated into the model’s action space.

Why current LLM agents have memory problems

Most agent frameworks treat memory as two loosely coupled systems.

Long-term memory stores user profiles, task information and previous interactions across sessions. Short-term memory is the current context window, which holds the active conversation and retrieved documents.

Existing systems design these two components separately. Long-term memory is handled through external stores such as vector databases with simple add and retrieve triggers. Short-term memory is managed with retrieval-augmented generation, sliding windows or summarization schedules.

This separation creates several problems.

- Long-term and short-term memory are optimized independently. Their interaction is never optimized end to end.

- Heuristics decide when to write to memory and when to summarize. These rules are brittle and miss rare but important events.

- Additional controllers or specialist models increase the cost and complexity of the system.

AgeMem removes the external controller and wraps memory operations into the agent policy itself.

Memory as tools in the agent action space

In AgeMem, memory operations are exposed as tools. At each step, the model can emit either regular text tokens or a tool call. The framework defines 6 tools.

For long-term memory:

- ADD stores a new memory item with content and metadata.

- UPDATE modifies an existing memory entry.

- DELETE removes outdated or low-value items.

For short-term memory:

- RETRIEVE performs a semantic search over long-term memory and injects the returned items into the current context.

- SUMMARY compresses spans of the conversation into shorter summaries.

- FILTER removes parts of the context that are not useful for future reasoning.
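To make the six tools concrete, here is a minimal sketch of how they could act on a long-term store and a short-term context. It is not AgeMem's implementation: `ToyMemory`, `MemoryItem` and every method signature are hypothetical names, retrieval uses plain word overlap instead of real semantic search, and the summary text is supplied by the caller where the trained policy would generate it.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    content: str
    metadata: dict = field(default_factory=dict)


class ToyMemory:
    """Hypothetical stand-in for the six tools, for illustration only."""

    def __init__(self):
        self.long_term = {}   # item id -> MemoryItem, persists across sessions
        self.context = []     # short-term context as a list of text segments
        self._next_id = 0

    # Long-term memory tools
    def add(self, content, metadata=None):
        item_id = self._next_id
        self._next_id += 1
        self.long_term[item_id] = MemoryItem(content, metadata or {})
        return item_id

    def update(self, item_id, content):
        self.long_term[item_id].content = content

    def delete(self, item_id):
        self.long_term.pop(item_id, None)

    # Short-term memory tools
    def retrieve(self, query, k=1):
        # Assumption: word overlap replaces a real semantic search.
        words = set(query.lower().split())
        ranked = sorted(
            self.long_term.values(),
            key=lambda item: len(words & set(item.content.lower().split())),
            reverse=True,
        )
        hits = [item.content for item in ranked[:k]]
        self.context.extend(hits)   # inject the results into the context
        return hits

    def summary(self, start, end, text):
        # Replace context segments [start, end) with one shorter summary.
        self.context[start:end] = [text]

    def filter(self, keep):
        # Drop every context segment for which keep(segment) is false.
        self.context = [seg for seg in self.context if keep(seg)]


mem = ToyMemory()
home = mem.add("User lives in Paris", {"source": "chat"})
mem.add("User prefers tea over coffee")
mem.update(home, "User lives in Lyon")
print(mem.retrieve("user lives where"))
```

The design point this illustrates is that all six operations are ordinary callable actions, so a policy can interleave them with text generation instead of relying on an external trigger.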

The interaction protocol has a structured format. Each step begins with a

Three-stage reinforcement learning for unified memory

AgeMem is trained with reinforcement learning in a way that couples long-term and short-term memory behaviors.

The state at time t includes the current conversational context, the long-term memory store and the task specification. The policy selects either a token or a tool call as its action. The training trajectory for each sample is divided into 3 stages:

- Stage 1, long-term memory formation: The agent interacts in an ordinary setting and comes across information that will be useful later. It uses ADD, UPDATE and DELETE to build and maintain long-term memory. The short-term context grows naturally during this stage.

- Stage 2, short-term memory control under distractions: The short-term context is reset. Long-term memory persists. The agent now receives distractor content that is related but not needed. It must manage short-term memory using SUMMARY and FILTER to keep useful content and remove noise.

- Stage 3, integrated reasoning: The final query arrives. The agent retrieves from long-term memory using RETRIEVE, controls the short-term context, and produces the answer.

An important detail is that long-term memory persists across stages while short-term memory is erased between Stage 1 and Stage 2. This design forces the model to rely on retrieval rather than residual context and exposes a realistic long-horizon dependency.
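The control flow of the three stages can be sketched as a short rollout skeleton. This is a simplified illustration rather than the training code: `AgentState`, `run_three_stages` and the `is_useful` callback are hypothetical, hard-coded rules stand in for the learned tool calls, and retrieval is plain word overlap.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    long_term: list = field(default_factory=list)  # persists across stages
    context: list = field(default_factory=list)    # short-term, reset once
    task: str = ""


def run_three_stages(state, facts, distractors, query, is_useful):
    # Stage 1: observe facts; the context grows and memory is built.
    for fact in facts:
        state.context.append(fact)
        state.long_term.append(fact)      # stands in for an ADD call

    # Between Stage 1 and Stage 2 only the short-term context is cleared.
    state.context = []

    # Stage 2: distractors arrive; FILTER-like cleanup removes the noise.
    state.context.extend(distractors)
    state.context = [seg for seg in state.context if is_useful(seg)]

    # Stage 3: the query arrives; RETRIEVE-like lookup refills the context.
    words = set(query.lower().split())
    hits = [m for m in state.long_term if words & set(m.lower().split())]
    state.context.extend(hits)
    return state.context                   # evidence available for the answer


state = AgentState(task="answer a question about alice")
evidence = run_three_stages(
    state,
    facts=["alice works at acme"],
    distractors=["bob likes jazz"],
    query="where does alice work",
    is_useful=lambda seg: "alice" in seg,
)
print(evidence)
```

Because the context is emptied after Stage 1, the only way the fact about alice can reach the final context is through the long-term store, which is the dependency the training design is meant to enforce.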

Reward design and step-wise GRPO

AgeMem uses a step-wise variant of Group Relative Policy Optimization (GRPO). For each task, the system samples multiple trajectories that form a group. A terminal reward is computed for each trajectory, then normalized within the group to obtain an advantage signal. This advantage is propagated to all steps in the trajectory so that intermediate tool choices are trained using the final outcome.
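The group normalization and the propagation to every step can be sketched in a few lines. The article only says rewards are normalized within the group, so the mean and standard deviation form below is an assumption borrowed from the common GRPO formulation, and `group_advantages`, `broadcast_to_steps` and `eps` are hypothetical names.

```python
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-8):
    """Normalize terminal rewards within one group of sampled trajectories."""
    mu = mean(rewards)
    sigma = pstdev(rewards)   # population std; the exact choice is an assumption
    return [(r - mu) / (sigma + eps) for r in rewards]


def broadcast_to_steps(advantages, steps_per_trajectory):
    """Give every step of a trajectory that trajectory's advantage."""
    return [[adv] * n for adv, n in zip(advantages, steps_per_trajectory)]


rewards = [0.2, 0.9, 0.4, 0.5]   # one terminal reward per sampled trajectory
advantages = group_advantages(rewards)
per_step = broadcast_to_steps(advantages, steps_per_trajectory=[3, 5, 4, 2])
for traj in per_step:
    print([round(a, 3) for a in traj])
```

Every tool call in a trajectory with an above-average final reward gets the same positive advantage, which is how early memory decisions receive credit for an answer produced many steps later.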

The total reward has three main components:

- A task reward that scores answer quality between 0 and 1 using an LLM judge.

- A context reward that measures the quality of short-term memory operations, including compression, early summarization and preservation of query-relevant content.

- A memory reward that measures the quality of long-term memory, including the proportion of high-quality items stored, the usefulness of maintenance operations and the relevance of retrieved items to the query.

Equal weights are used for these three components so that each one contributes equally to the learning signal. A penalty term is added when the agent exceeds the maximum allowed dialogue length or when the context overflows the limit.
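The reward composition described above can be written as a small function. The equal weighting and the two penalty conditions come from the description; the function name, the shared `weight` of 1.0, the `penalty` size of 0.5 and the choice to apply the two penalties independently are assumptions made for illustration.

```python
def total_reward(task, context, memory, *, turns, context_tokens,
                 max_turns, max_context_tokens, weight=1.0, penalty=0.5):
    """Combine the three reward components with equal weights and penalties."""
    # Equal weights: one shared weight applied to all three components.
    reward = weight * (task + context + memory)
    # Hypothetical penalty for exceeding the allowed dialogue length.
    if turns > max_turns:
        reward -= penalty
    # Hypothetical penalty for overflowing the context limit.
    if context_tokens > max_context_tokens:
        reward -= penalty
    return reward


print(total_reward(1.0, 0.5, 0.5, turns=3, context_tokens=100,
                   max_turns=5, max_context_tokens=200))
```

With these placeholder values, a trajectory that stays within both limits keeps its full score, while one that runs too long or overflows the context loses part of it regardless of answer quality.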

Experimental setup and main results

The research team fine-tunes AgeMem on the HotpotQA training split and evaluates it on 5 benchmarks:

- ALFWorld for text-based interactive tasks.

- SciWorld for science-themed environments.

- BabyAI for instruction following.

- PDDL tasks for planning.

- HotpotQA for multi-hop question answering.

Metrics include success rate for ALFWorld, SciWorld and BabyAI, progress rate for PDDL tasks, and an LLM judge score for HotpotQA. The team also defines a Memory Quality metric that uses an LLM evaluator to compare stored memories with the HotpotQA supporting facts.

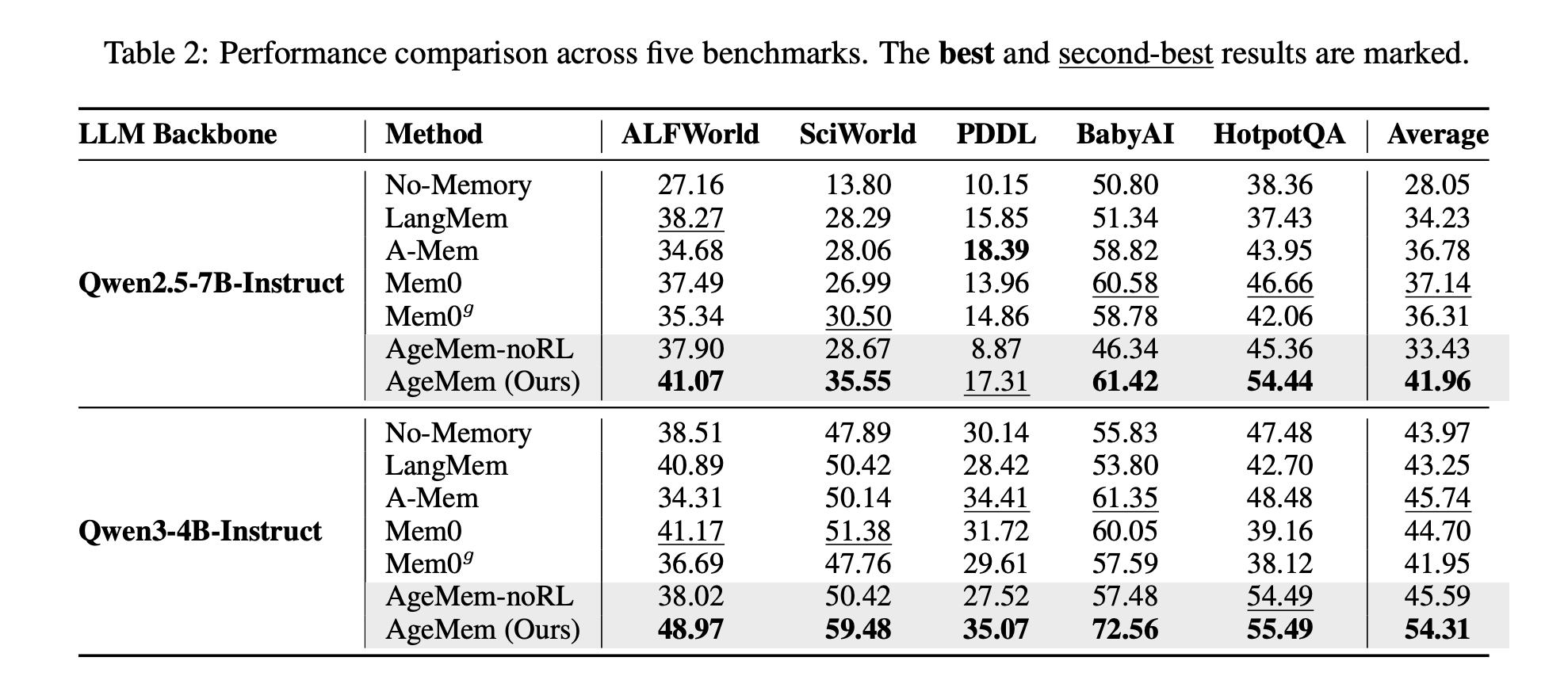

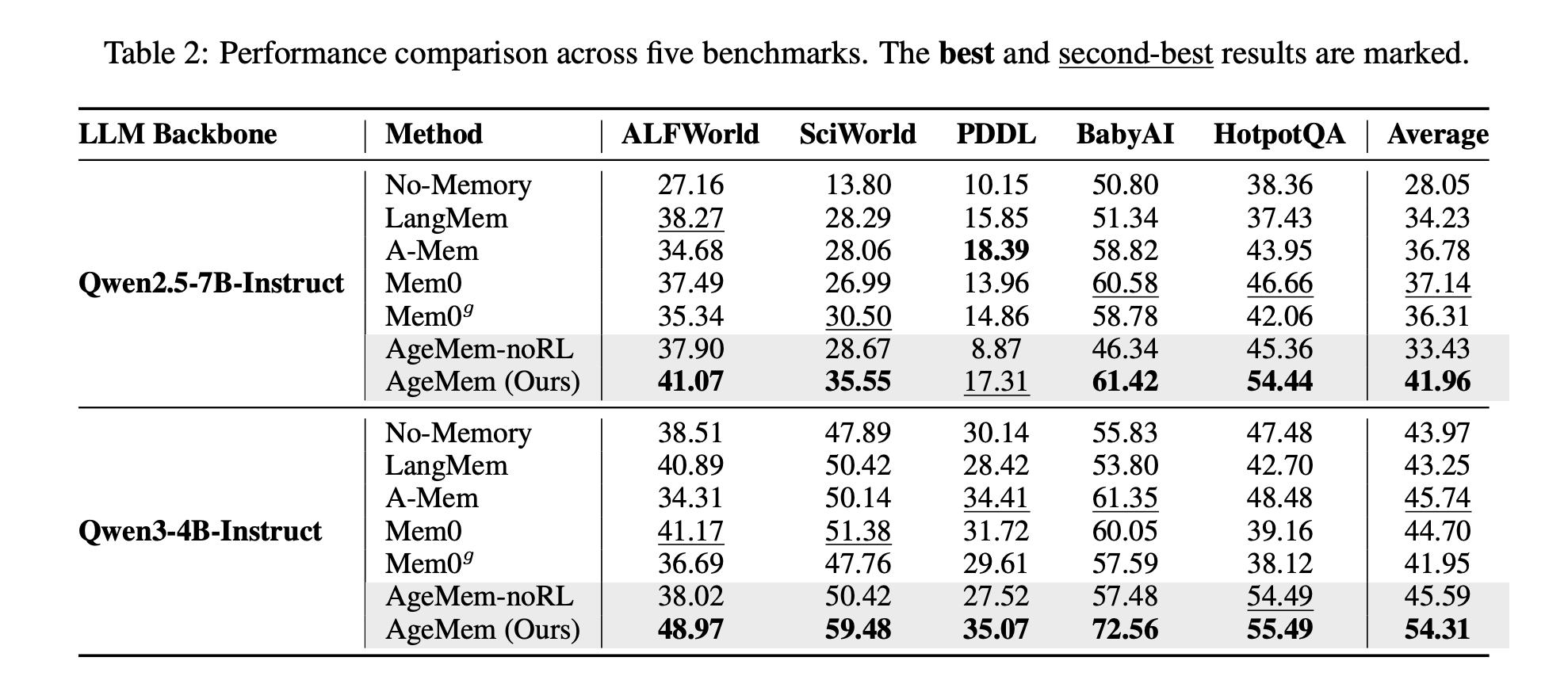

Baselines include LangMem, A-Mem, Mem0, Mem0g and a no-memory agent. The backbones are Qwen2.5-7B-Instruct and Qwen3-4B-Instruct.

On Qwen2.5-7B-Instruct, AgeMem achieves an average score of 41.96 across all 5 benchmarks, while the best baseline, Mem0, achieves 37.14. On Qwen3-4B-Instruct, AgeMem reaches 54.31, compared to 45.74 for the best baseline, A-Mem.
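To put these averages in perspective, the gaps can be computed directly from the reported numbers. The helper `gain` is a hypothetical name, and the relative figures are simple derived arithmetic, not values reported by the authors.

```python
# (AgeMem average, best baseline average) as reported for each backbone
results = {
    "Qwen2.5-7B-Instruct": (41.96, 37.14),
    "Qwen3-4B-Instruct": (54.31, 45.74),
}


def gain(agemem, baseline):
    """Return the absolute gap in points and the relative gap in percent."""
    absolute = agemem - baseline
    relative = 100 * absolute / baseline
    return round(absolute, 2), round(relative, 1)


for backbone, (agemem, baseline) in results.items():
    points, percent = gain(agemem, baseline)
    print(f"{backbone}: +{points} points ({percent}% relative)")
```

This works out to a gap of 4.82 points (about 13.0 percent relative) on Qwen2.5-7B-Instruct and 8.57 points (about 18.7 percent relative) on Qwen3-4B-Instruct.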

Memory quality also improves. On HotpotQA, AgeMem reaches 0.533 with Qwen2.5-7B and 0.605 with Qwen3-4B, higher than all baselines.

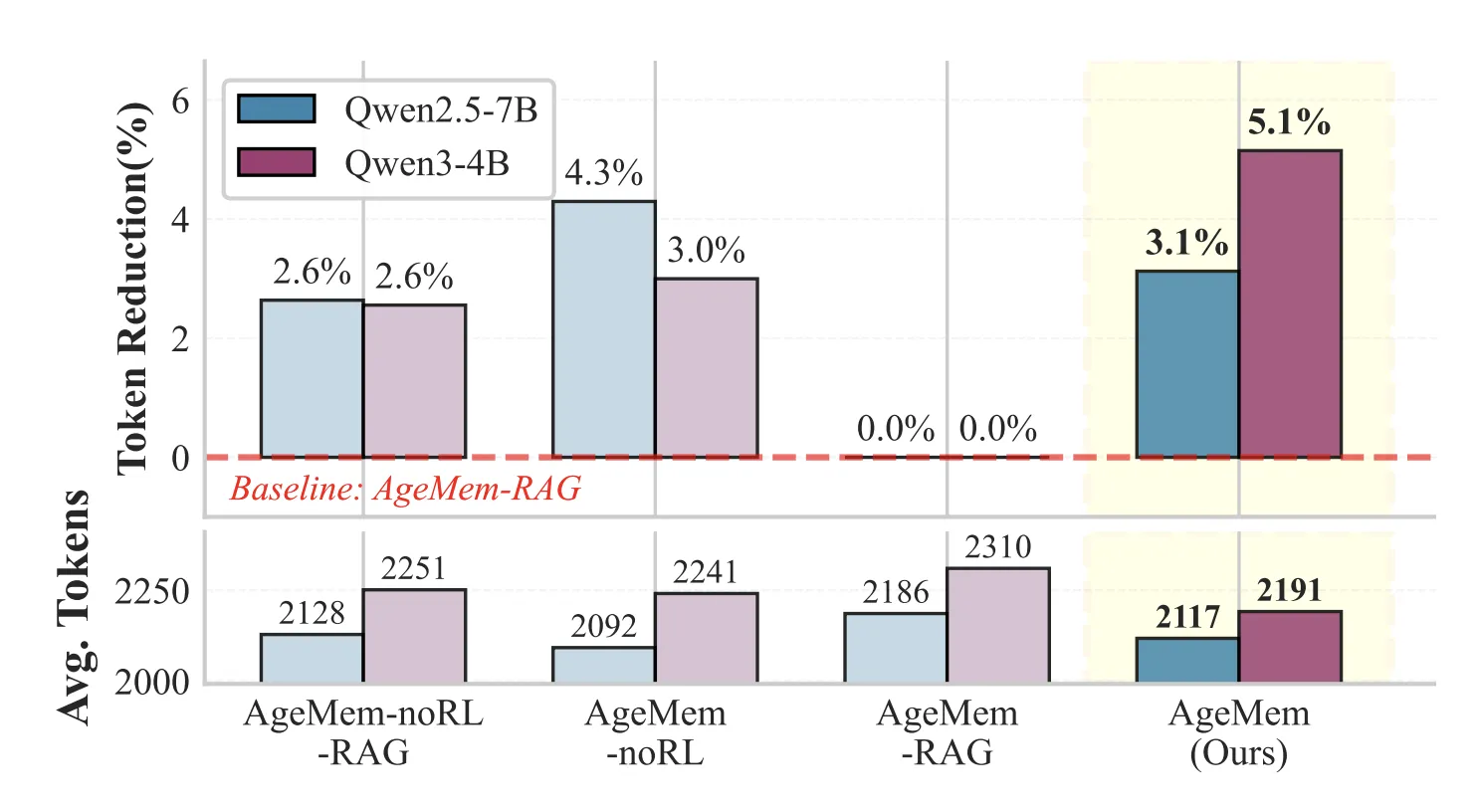

Short-term memory tools reduce prompt length while preserving performance. On HotpotQA, configurations with STM tools use about 3 to 5 percent fewer tokens per prompt than variants that replace STM tools with a retrieval pipeline.

Ablation studies confirm that each component matters. Adding only long-term memory tools on top of a no-memory baseline already yields clear gains. Adding reinforcement learning on top of these tools improves scores further. The full system, with both long-term and short-term tools plus RL, gives a 21.7 percent score improvement over the no-memory baseline on SciWorld.

Implications for LLM agent design

AgeMem proposes a design pattern for future agent systems. Memory should be treated as part of a learned policy, not as two external subsystems. By turning storage, retrieval, summarization and filtering into explicit tools and training them jointly with language generation, the agent learns when to remember, when to forget and how to manage context efficiently across long horizons.

Key Takeaways

- AgeMem turns memory operations into explicit tools, so the same policy that generates text also decides when to ADD, UPDATE, DELETE, RETRIEVE, SUMMARY and FILTER memory.

- Long-term and short-term memory are jointly trained in a three-stage RL setup in which long-term memory persists across stages and short-term context is reset to enforce retrieval-based reasoning.

- The reward function combines task accuracy, context management quality and long-term memory quality with equal weighting, with penalties for context overflow and excessive dialogue length.

- Across ALFWorld, SciWorld, BabyAI, PDDL and HotpotQA tasks, AgeMem on Qwen2.5-7B and Qwen3-4B consistently outperforms memory baselines such as LangMem, A-Mem and Mem0 in average scores and memory quality metrics.

- Short-term memory tools reduce prompt length by about 3 to 5 percent compared to a RAG-style baseline while maintaining or improving performance, indicating that learned summarization and filtering can replace manual context-handling rules.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the power of Artificial Intelligence for the benefit of society. His latest endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its extensive coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among readers.