NVIDIA Releases PersonaPlex-7B-v1: A Real-Time Speech-to-Speech Model Designed for Natural and Two-Way Conversations

NVIDIA researchers released the PersonaPlex-7B-v1, a full-duplex speech in conversation model that targets natural voice interaction and intuitive human control.

From ASR→LLM→TTS to one double model

Common voice assistants often run a cascade. Automatic Speech Recognition (ASR) converts speech to text, the language model generates text feedback, and Text to Speech (TTS) converts back to audio. Each stage adds delay, and the pipeline cannot handle skipped speech, environmental interference, or crowded background channels.

PersonaPlex replaces this stack with a single Transformer model that enables streaming speech understanding and speech processing on a single network. The model works with continuous neural coded noise and predicts both text tokens and audio tokens automatically. The incoming user’s voice is encoded incrementally, while PersonaPlex simultaneously reproduces its own speech, allowing for ingress, overlap, fast turn, and background channels.

PersonaPlex operates in a dual-stream configuration. One stream tracks the user’s voice, the other stream tracks the agent’s speech and text. Both streams share the same model state, so the agent can continue to listen while talking and can adjust its response when the user interrupts. This design is directly inspired by Kyutai’s Moshi full duplex frame.

Hybrid prompting, voice control and role control

PersonaPlex uses two commands to define the identity of a conversation.

- Speech information is a sequence of sound tokens that includes voice features, speech style, and prosody.

- A written command describes the role, background, organizational information, and context of the situation.

Together, these cues constrain both the linguistic content and the acoustic behavior of the agent. In addition to this, the system information supports fields such as name, business name, agent name, and business information, with a budget of up to 200 tokens.

Architecture, Helium core and sound system

The PersonaPlex model has 7B parameters and follows the architecture of the Moshi network. The Mimi speech encoder that combines ConvNet and Transformer layers converts the waveform audio into discrete tokens. Temporal and depth transformers process multiple channels representing user audio, agent text, and agent audio. A Mimi speech recorder that also combines Transformer and ConvNet layers generates output audio tokens. Audio uses a 24 kHz sample rate for both input and output.

PersonaPlex is built on Moshi weights and uses Helium as the backbone of the language model. Helium provides semantic understanding and enables familiarization without supervised conversational situations. This is seen in the example of the ‘space emergency’, where the information about the failure of the main reactor in the Mars mission leads to the corresponding technical reasoning with the appropriate emotional tone, although this situation is not part of the training distribution.

A combination of training data, real interviews and role plays

The training has 1 stage and uses a combination of real and simulated interviews.

The actual conversations come from 7,303 calls, about 1,217 hours, in the Fisher English corpus. These conversations are also explained in notifications using GPT-OSS-120B. Instructions are written at different levels of granularity, from simple personal hints such as ‘Enjoys having a good conversation’ to longer descriptions including bio, location, and preferences. This corpus provides natural background channels, interruptions, pauses, and emotional patterns that are difficult to find in TTS alone.

Transactional data includes assistant and customer service roles. The NVIDIA team reports 39,322 customer service interviews, approximately 410 hours, and 105,410 customer service interviews, approximately 1,840 hours. Qwen3-32B and GPT-OSS-120B generate text, and Chatterbox TTS converts it to speech. With the helper’s interaction, the text information has been modified as ‘You are a wise and friendly teacher. Answer questions or give advice in a clear and engaging way.’ In customer service situations, information encodes the organization, role type, agent name, and formal business rules such as rates, hours, and limits.

This design allows PersonaPlex to separate natural conversational behavior, which comes mainly from Fisher, from the stickiness of the task and role situation, which comes mainly from artificial situations.

Testing on FullDuplexBench and ServiceDuplexBench

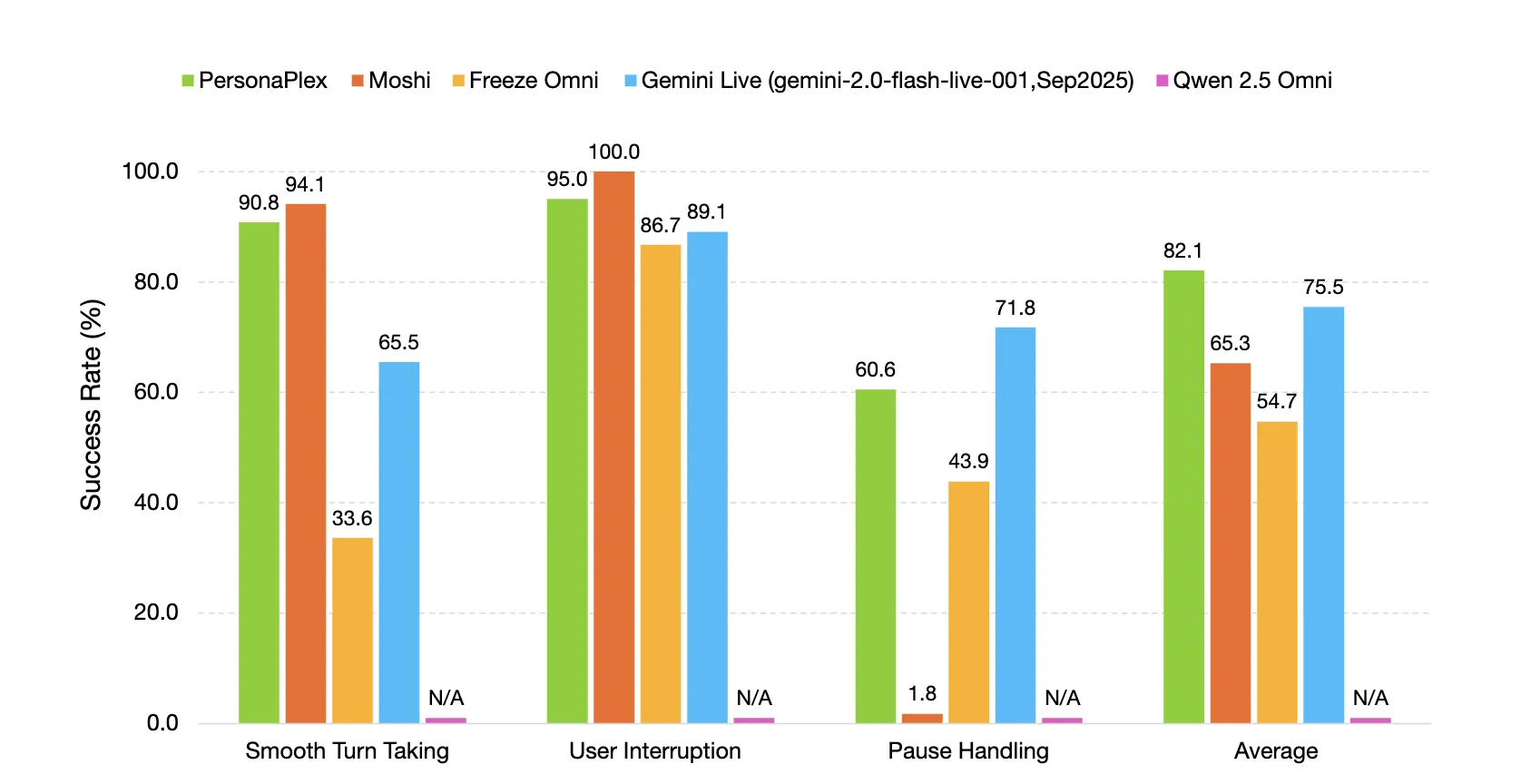

PersonaPlex is tested on FullDuplexBench, a benchmark for full duplex communication models, and on a new extension called ServiceDuplexBench for customer service scenarios.

FullDuplexBench measures chat power with Takeover Rate and latency metrics for tasks such as smooth takeover, handling user interruptions, handling latency, and backtracking. GPT-4o acts as an LLM judge for the quality of the response in the question answering fields. PersonaPlex achieves smooth transitions taking TOR 0.908 with latency 0.170 seconds and user disruption TOR 0.950 with latency 0.240 seconds. The speaker match between speech input and output in the setting under user interference uses WavLM TDNN embedding and reaches 0.650.

PersonaPlex outperforms many other open-source and closed-source systems in terms of chat dynamics, response latency, disruption latency, and engagement in both assistant and customer service roles.

Key Takeaways

- PersonaPlex-7B-v1 is a 7B parameter full duplex speech to speech model from NVIDIA, built on the Moshi architecture with the backbone of the Helium language model, code under MIT and weights under the NVIDIA Open Model License.

- The model uses a Dual stream Transformer with a Mimi encoder speech and a decoder at 24 kHz, encodes the continuous sound into separate tokens and generates text and audio tokens at the same time, allowing for entry, overlap, fast turn, and natural background channels.

- Human control is handled by mixed information, voice information made of audio tokens sets the timbre and style, text information and system information up to 200 tokens describe the role, business context, and constraints, with ready-made voice embeddings such as the NATF and NATM families.

- The training uses a combination of 7,303 Fisher conversations, about 1,217 hours, annotated with GPT-OSS-120B, and artificial conversations for assistants and customer service, about 410 hours and 1,840 hours, produced with Qwen3-32B and GPT-OSS-120B that separate TTS conversations and a naturalized task box.

- In FullDuplexBench and ServiceDuplexBench, PersonaPlex achieves a smooth take-up rate of 0.908 and a user interruption take-up rate of 0.950 with sub-second latency and improved performance retention.

Check it out Technical specifications, Model weights again Repo. Also, feel free to follow us Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to Our newspaper. Wait! are you on telegram? now you can join us on telegram too.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the power of Artificial Intelligence for the benefit of society. His latest endeavor is the launch of Artificial Intelligence Media Platform, Marktechpost, which stands out for its extensive coverage of machine learning and deep learning stories that sound technically sound and easily understood by a wide audience. The platform boasts of more than 2 million monthly views, which shows its popularity among viewers.